Argo meta-data

Index of profiles

Since the Argo measurement dataset is quite complex, it comes with a collection of index files: lookup tables with metadata. These indexes help you determine what to expect before retrieving the full set of measurements.
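To make the idea of a lookup table concrete, here is a minimal sketch that reads a profile index with the Python standard library only. It assumes the layout of ar_index_global_prof.txt: comment lines starting with `#`, then a CSV header `file,date,latitude,longitude,ocean,profiler_type,institution,date_update`, with dates written as `YYYYMMDDHHMMSS`. The two data rows are made up for illustration; this is not how argopy itself parses the file.

```python
import csv
from datetime import datetime

# Hypothetical excerpt of a profile index file (header abbreviated, rows made up).
SAMPLE_INDEX = """\
# Title : (abbreviated header comment)
file,date,latitude,longitude,ocean,profiler_type,institution,date_update
aoml/1234567/profiles/R1234567_001.nc,20070815120000,42.5,-57.3,A,846,AO,20200101000000
aoml/1234567/profiles/R1234567_002.nc,20070825120000,43.1,-56.9,A,846,AO,20200101000000
"""


def read_index(text):
    """Parse index text into a list of dicts, skipping '#' comment lines."""
    lines = [line for line in text.splitlines() if not line.startswith("#")]
    rows = []
    for row in csv.DictReader(lines):
        # Convert the columns we want to search on into proper types.
        row["date"] = datetime.strptime(row["date"], "%Y%m%d%H%M%S")
        row["latitude"] = float(row["latitude"])
        row["longitude"] = float(row["longitude"])
        rows.append(row)
    return rows


index = read_index(SAMPLE_INDEX)
print(len(index), index[0]["file"], index[0]["date"])
```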

argopy provides two methods to work with Argo index files: one is high-level and works like the data fetcher, the other is low-level and works like a “store”.

Fetcher: High-level Argo index access

argopy has a specific fetcher for index files:

In [1]: from argopy import IndexFetcher as ArgoIndexFetcher

You can use the Index fetcher with the region or float access points, similarly to data fetching:

In [2]: idx = ArgoIndexFetcher(src='gdac').float(2901623).load()

In [3]: idx.index

Out[3]:

file ... profiler

0 nmdis/2901623/profiles/R2901623_000.nc ... PROVOR float with SBE conductivity sensor

1 nmdis/2901623/profiles/R2901623_000D.nc ... PROVOR float with SBE conductivity sensor

2 nmdis/2901623/profiles/R2901623_001.nc ... PROVOR float with SBE conductivity sensor

3 nmdis/2901623/profiles/R2901623_002.nc ... PROVOR float with SBE conductivity sensor

4 nmdis/2901623/profiles/R2901623_003.nc ... PROVOR float with SBE conductivity sensor

.. ... ... ...

93 nmdis/2901623/profiles/R2901623_092.nc ... PROVOR float with SBE conductivity sensor

94 nmdis/2901623/profiles/R2901623_093.nc ... PROVOR float with SBE conductivity sensor

95 nmdis/2901623/profiles/R2901623_094.nc ... PROVOR float with SBE conductivity sensor

96 nmdis/2901623/profiles/R2901623_095.nc ... PROVOR float with SBE conductivity sensor

97 nmdis/2901623/profiles/R2901623_096.nc ... PROVOR float with SBE conductivity sensor

[98 rows x 12 columns]

Alternatively, you can use argopy.IndexFetcher.to_dataframe():

In [4]: idx = ArgoIndexFetcher(src='gdac').float(2901623)

In [5]: df = idx.to_dataframe()

The difference is that with the load method, data are stored in memory and not fetched on every call to the index attribute.
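This difference is essentially a caching pattern. The sketch below uses a hypothetical `CachedIndex` class (not argopy's implementation) that counts how many times it would hit the remote server: after `load()`, the content is fetched once and reused; without it, every access to `index` fetches again.

```python
class CachedIndex:
    """Hypothetical stand-in for an index fetcher, illustrating load() caching."""

    def __init__(self):
        self.n_fetch = 0      # number of (simulated) remote fetches
        self._cache = None    # filled by load()

    def _fetch(self):
        # Placeholder for a remote request; returns a tiny fake table.
        self.n_fetch += 1
        return [{"file": "aoml/1234567/profiles/R1234567_001.nc"}]

    def load(self):
        self._cache = self._fetch()
        return self  # allow chaining, as in .float(...).load()

    @property
    def index(self):
        # Use the in-memory copy if available, otherwise fetch again.
        return self._cache if self._cache is not None else self._fetch()


lazy = CachedIndex()
lazy.index
lazy.index
loaded = CachedIndex().load()
loaded.index
loaded.index
print(lazy.n_fetch, loaded.n_fetch)
```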

The index fetcher has pretty much the same methods as the data fetchers. You can check them all here: argopy.fetchers.ArgoIndexFetcher.

Store: Low-level Argo index access

The IndexFetcher shown above is a user-friendly layer on top of our internal Argo index file store. But if you are familiar with Argo index files and/or care about performance, you may want to use the Argo index store ArgoIndex directly.

If Pyarrow is installed, this store relies on pyarrow.Table as the internal storage format for the index; otherwise it falls back on pandas.DataFrame. Loading the full Argo profile index takes about 2 to 3 seconds with Pyarrow, and up to 6 to 7 seconds with Pandas.
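The fallback described above is a common optional-dependency pattern. A minimal sketch of the decision (an illustration, not argopy's internal code; `pick_backend` is a hypothetical name) checks whether pyarrow is installed without actually importing it:

```python
import importlib.util


def pick_backend():
    """Return the name of the table backend to use, preferring pyarrow."""
    # find_spec returns None when the package cannot be found.
    if importlib.util.find_spec("pyarrow") is not None:
        return "pyarrow"
    return "pandas"


print(pick_backend())
```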

All index store methods and properties are documented in ArgoIndex.

Supported index files

The table below summarizes the argopy support status of all Argo index files:

| Index file | File name | Supported |
|---|---|---|
| Profile | ar_index_global_prof.txt | ✅ |
| Synthetic-Profile | argo_synthetic-profile_index.txt | ✅ |
| Bio-Profile | argo_bio-profile_index.txt | ✅ |
| Trajectory | ar_index_global_traj.txt | ❌ |
| Bio-Trajectory | argo_bio-traj_index.txt | ❌ |
| Metadata | ar_index_global_meta.txt | ❌ |
| Technical | ar_index_global_tech.txt | ❌ |
| Greylist | ar_greylist.txt | ❌ |

Support for other index files can be added on demand. Please raise an issue if you’d like to access other index files.

Usage

You create an index store with default or custom options:

In [6]: from argopy import ArgoIndex

In [7]: idx = ArgoIndex()

# or:

# ArgoIndex(index_file="argo_bio-profile_index.txt")

# ArgoIndex(index_file="bgc-s") # can use keyword instead of file name: core, bgc-b, bgc-s

# ArgoIndex(host="ftp://ftp.ifremer.fr/ifremer/argo")

# ArgoIndex(host="https://data-argo.ifremer.fr", index_file="core")

# ArgoIndex(host="https://data-argo.ifremer.fr", index_file="ar_index_global_prof.txt", cache=True)
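The `index_file` keywords are shortcuts for full file names. A sketch of that lookup, with the mapping taken from this page (the `resolve_index_file` helper is hypothetical, not part of argopy):

```python
# Keyword -> index file name, as described on this page.
INDEX_KEYWORDS = {
    "core": "ar_index_global_prof.txt",
    "bgc-b": "argo_bio-profile_index.txt",
    "bgc-s": "argo_synthetic-profile_index.txt",
}


def resolve_index_file(index_file="core"):
    """Return the file name for a keyword, or the argument itself if it is already a file name."""
    return INDEX_KEYWORDS.get(index_file, index_file)


print(resolve_index_file("bgc-s"))
```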

You can then trigger loading of the index content:

In [8]: idx.load() # Load the full index in memory

Out[8]:

<argoindex.pandas>

Host: https://data-argo.ifremer.fr

Index: ar_index_global_prof.txt

Convention: ar_index_global_prof (Profile directory file of the Argo GDAC)

Loaded: True (2963627 records)

Searched: False

Here is the list of methods and properties of the full index:

idx.load(nrows=12) # Only load the first N rows of the index

idx.N_RECORDS # Shortcut for length of 1st dimension of the index array

idx.to_dataframe(index=True) # Convert index to user-friendly :class:`pandas.DataFrame`

idx.to_dataframe(index=True, nrows=2) # Only returns the first nrows of the index

idx.index # internal storage structure of the full index (:class:`pyarrow.Table` or :class:`pandas.DataFrame`)

idx.uri_full_index # List of absolute paths to files from the full index table column 'file'
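The `file` column of an index holds paths relative to the data directory. A minimal sketch of turning such an entry into an absolute path, assuming (as on the GDAC) that profile files live under a `dac/` directory on the host; `full_uri` is a hypothetical helper:

```python
def full_uri(host, file):
    """Build an absolute path to a profile file from an index 'file' entry."""
    # Assumption: index entries are relative to the host's 'dac' directory.
    return host.rstrip("/") + "/dac/" + file.lstrip("/")


files = ["nmdis/2901623/profiles/R2901623_000.nc"]
print([full_uri("https://data-argo.ifremer.fr", f) for f in files])
```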

There are several methods to search the index, for instance:

In [9]: idx.search_lat_lon_tim([-60, -55, 40., 45., '2007-08-01', '2007-09-01'])

Out[9]:

<argoindex.pandas>

Host: https://data-argo.ifremer.fr

Index: ar_index_global_prof.txt

Convention: ar_index_global_prof (Profile directory file of the Argo GDAC)

Loaded: True (2963627 records)

Searched: True (12 matches, 0.0004%)
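The BOX definition is `[lon_min, lon_max, lat_min, lat_max, date_min, date_max]`. A minimal sketch of the filtering idea on made-up rows, treating all bounds as inclusive (an assumption; the real store may handle edges differently):

```python
from datetime import datetime

# Hypothetical index rows (made-up values).
rows = [
    {"file": "aoml/1234567/profiles/R1234567_001.nc", "longitude": -57.3, "latitude": 42.5, "date": datetime(2007, 8, 15)},
    {"file": "aoml/1234567/profiles/R1234567_002.nc", "longitude": -50.0, "latitude": 42.5, "date": datetime(2007, 8, 20)},
    {"file": "aoml/1234567/profiles/R1234567_003.nc", "longitude": -57.0, "latitude": 44.0, "date": datetime(2007, 9, 15)},
]


def in_box(row, box):
    """True if a row falls inside [lon_min, lon_max, lat_min, lat_max, t_min, t_max]."""
    lon_min, lon_max, lat_min, lat_max, t_min, t_max = box
    t_min, t_max = datetime.fromisoformat(t_min), datetime.fromisoformat(t_max)
    # Inclusive bounds on longitude, latitude and time.
    return (lon_min <= row["longitude"] <= lon_max
            and lat_min <= row["latitude"] <= lat_max
            and t_min <= row["date"] <= t_max)


box = [-60, -55, 40.0, 45.0, "2007-08-01", "2007-09-01"]
matches = [r["file"] for r in rows if in_box(r, box)]
print(matches)
```

The second row is rejected on longitude and the third on date.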

Here is the list of all methods to search the index:

idx.search_wmo(1901393)

idx.search_cyc(1)

idx.search_wmo_cyc(1901393, [1,12])

idx.search_tim([-60, -55, 40., 45., '2007-08-01', '2007-09-01']) # Take an index BOX definition, only time is used

idx.search_lat_lon([-60, -55, 40., 45., '2007-08-01', '2007-09-01']) # Take an index BOX definition, only lat/lon is used

idx.search_lat_lon_tim([-60, -55, 40., 45., '2007-08-01', '2007-09-01']) # Take an index BOX definition

idx.search_params(['C1PHASE_DOXY', 'DOWNWELLING_PAR']) # Only for BGC profile index

idx.search_parameter_data_mode({'BBP700': 'D'}) # Only for BGC profile index
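One way to picture `search_wmo_cyc()` is to read the float WMO and cycle number from the profile file name, assuming the `<prefix><WMO>_<cycle>[D].nc` naming visible in the index output at the top of this page (a trailing `D` marks a descending profile). This is a sketch, not argopy's code:

```python
import re

# e.g. R2901623_001.nc or R2901623_000D.nc
PATTERN = re.compile(r"([A-Z]*)(\d+)_(\d+)(D?)\.nc$")


def wmo_cyc(path):
    """Return (wmo, cycle) parsed from a profile file path."""
    m = PATTERN.search(path)
    if m is None:
        raise ValueError(f"unexpected file name: {path}")
    return int(m.group(2)), int(m.group(3))


def search_wmo_cyc(files, wmo, cycles):
    """Keep files from float `wmo` whose cycle number is in `cycles`."""
    wanted = set(cycles)
    out = []
    for f in files:
        w, c = wmo_cyc(f)
        if w == wmo and c in wanted:
            out.append(f)
    return out


files = [
    "nmdis/2901623/profiles/R2901623_000.nc",
    "nmdis/2901623/profiles/R2901623_000D.nc",
    "nmdis/2901623/profiles/R2901623_001.nc",
    "nmdis/2901623/profiles/R2901623_002.nc",
]
print(search_wmo_cyc(files, 2901623, [0, 2]))
```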

And finally the list of methods and properties for search results:

idx.N_MATCH # Shortcut for length of 1st dimension of the search results array

idx.to_dataframe() # Convert search results to user-friendly :class:`pandas.DataFrame`

idx.to_dataframe(nrows=2) # Only returns the first nrows of the search results

idx.to_indexfile("search_index.txt") # Export search results to Argo standard index file

idx.search # Internal table with search results

idx.uri # List of absolute paths to files from the search results table column 'file'
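Exporting search results amounts to writing a `#` comment header followed by the CSV table. The sketch below shows a simplified version of that layout with the standard library; the column list is assumed from the core profile index, the header is reduced to a single line, and the row is made up. It is not argopy's `to_indexfile` implementation.

```python
import csv
import io

# Column layout assumed from the core profile index (ar_index_global_prof.txt).
COLUMNS = ["file", "date", "latitude", "longitude", "ocean",
           "profiler_type", "institution", "date_update"]


def to_index_text(rows, title="Search results"):
    """Serialize rows as index-like text: a '#' comment header, then CSV."""
    buf = io.StringIO()
    buf.write(f"# Title : {title}\n")
    writer = csv.DictWriter(buf, fieldnames=COLUMNS, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()


# One made-up search result row.
results = [{
    "file": "aoml/1234567/profiles/R1234567_001.nc", "date": "20070815120000",
    "latitude": "42.5", "longitude": "-57.3", "ocean": "A",
    "profiler_type": "846", "institution": "AO", "date_update": "20200101000000",
}]
text = to_index_text(results)
print(text)
```

The returned string can then be written to disk with `open("search_index.txt", "w")`.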

Usage with bgc index

The argopy index store supports the Bio and Synthetic Profile directory files:

In [10]: idx = ArgoIndex(index_file="argo_bio-profile_index.txt").load()

# idx = ArgoIndex(index_file="argo_synthetic-profile_index.txt").load()

In [11]: idx

Out[11]:

<argoindex.pandas>

Host: https://data-argo.ifremer.fr

Index: argo_bio-profile_index.txt

Convention: argo_bio-profile_index (Bio-Profile directory file of the Argo GDAC)

Loaded: True (311448 records)

Searched: False

Hint

To load a BGC-Argo profile index, you can use the bgc-b or bgc-s keyword to load the argo_bio-profile_index.txt or argo_synthetic-profile_index.txt index file, respectively.

All methods presented above are valid with BGC index, but a BGC index store comes with additional search possibilities for parameters and parameter data modes.

Two specific index variables are only available with BGC-Argo index files: PARAMETERS and PARAMETER_DATA_MODE. We thus implemented the ArgoIndex.search_params() and ArgoIndex.search_parameter_data_mode() methods. These methods let you search for (i) profiles with one or more specific parameters and (ii) profiles with parameters in one or more specific data modes.
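The two variables work as a pair: each data mode refers to the parameter at the same position. A minimal sketch, assuming PARAMETERS is a space-separated list and PARAMETER_DATA_MODE holds one character per parameter in the same order (the entry below is made up):

```python
def parameter_modes(parameters, parameter_data_mode):
    """Map each parameter of an index entry to its data mode (R, A or D)."""
    names = parameters.split()
    modes = parameter_data_mode.replace(" ", "")  # tolerate optional spaces
    if len(names) != len(modes):
        raise ValueError("parameters and data modes are not aligned")
    return dict(zip(names, modes))


# Made-up index entry for illustration.
modes = parameter_modes("PRES TEMP PSAL DOXY BBP700", "RRDAD")
print(modes)
```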

Syntax for ArgoIndex.search_params()

In [12]: from argopy import ArgoIndex

In [13]: idx = ArgoIndex(index_file='bgc-s').load()

In [14]: idx

Out[14]:

<argoindex.pandas>

Host: https://data-argo.ifremer.fr

Index: argo_synthetic-profile_index.txt

Convention: argo_synthetic-profile_index (Synthetic-Profile directory file of the Argo GDAC)

Loaded: True (310195 records)

Searched: False

You can search for one parameter:

In [15]: idx.search_params('DOXY')

Out[15]:

<argoindex.pandas>

Host: https://data-argo.ifremer.fr

Index: argo_synthetic-profile_index.txt

Convention: argo_synthetic-profile_index (Synthetic-Profile directory file of the Argo GDAC)

Loaded: True (310195 records)

Searched: True (296750 matches, 95.6656%)

Or you can search for several parameters:

In [16]: idx.search_params(['DOXY', 'CDOM'])

Out[16]:

<argoindex.pandas>

Host: https://data-argo.ifremer.fr

Index: argo_synthetic-profile_index.txt

Convention: argo_synthetic-profile_index (Synthetic-Profile directory file of the Argo GDAC)

Loaded: True (310195 records)

Searched: True (48569 matches, 15.6576%)

Note that a search on multiple parameters returns only profiles that have all of the parameters. To search for profiles with any of the parameters, use:

In [17]: idx.search_params(['DOXY', 'CDOM'], logical='or')

Out[17]:

<argoindex.pandas>

Host: https://data-argo.ifremer.fr

Index: argo_synthetic-profile_index.txt

Convention: argo_synthetic-profile_index (Synthetic-Profile directory file of the Argo GDAC)

Loaded: True (310195 records)

Searched: True (308944 matches, 99.5967%)
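The `logical` switch boils down to combining per-parameter tests with `all` or `any`. A sketch on made-up entries (not argopy's code). Splitting PARAMETERS into names avoids false matches such as `DOXY` inside `TEMP_DOXY`.

```python
def search_params(entries, params, logical="and"):
    """Return files whose PARAMETERS contain all ('and') or any ('or') of params."""
    if logical not in ("and", "or"):
        raise ValueError("logical must be 'and' or 'or'")
    if isinstance(params, str):
        params = [params]
    combine = all if logical == "and" else any
    return [e["file"] for e in entries
            if combine(p in e["parameters"].split() for p in params)]


# Made-up index entries.
entries = [
    {"file": "p1", "parameters": "PRES TEMP PSAL DOXY"},
    {"file": "p2", "parameters": "PRES TEMP PSAL DOXY CDOM"},
    {"file": "p3", "parameters": "PRES TEMP PSAL CDOM"},
    {"file": "p4", "parameters": "PRES TEMP PSAL TEMP_DOXY"},
]
print(search_params(entries, ["DOXY", "CDOM"]))
print(search_params(entries, ["DOXY", "CDOM"], logical="or"))
```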

Syntax for ArgoIndex.search_parameter_data_mode()

In [18]: from argopy import ArgoIndex

In [19]: idx = ArgoIndex(index_file='bgc-b').load()

In [20]: idx

Out[20]:

<argoindex.pandas>

Host: https://data-argo.ifremer.fr

Index: argo_bio-profile_index.txt

Convention: argo_bio-profile_index (Bio-Profile directory file of the Argo GDAC)

Loaded: True (311448 records)

Searched: False

You can search one mode for a single parameter:

In [21]: idx.search_parameter_data_mode({'BBP700': 'D'})

Out[21]:

<argoindex.pandas>

Host: https://data-argo.ifremer.fr

Index: argo_bio-profile_index.txt

Convention: argo_bio-profile_index (Bio-Profile directory file of the Argo GDAC)

Loaded: True (311448 records)

Searched: True (17529 matches, 5.6282%)

You can search several modes for a single parameter:

In [22]: idx.search_parameter_data_mode({'DOXY': ['R', 'A']})

Out[22]:

<argoindex.pandas>

Host: https://data-argo.ifremer.fr

Index: argo_bio-profile_index.txt

Convention: argo_bio-profile_index (Bio-Profile directory file of the Argo GDAC)

Loaded: True (311448 records)

Searched: True (104120 matches, 33.4309%)

You can search modes for several parameters at once:

In [23]: idx.search_parameter_data_mode({'BBP700': 'D', 'DOXY': 'D'}, logical='and')

Out[23]:

<argoindex.pandas>

Host: https://data-argo.ifremer.fr

Index: argo_bio-profile_index.txt

Convention: argo_bio-profile_index (Bio-Profile directory file of the Argo GDAC)

Loaded: True (311448 records)

Searched: True (11292 matches, 3.6256%)

And mix all of these as you wish:

In [24]: idx.search_parameter_data_mode({'BBP700': ['R', 'A'], 'DOXY': 'D'}, logical='or')

Out[24]:

<argoindex.pandas>

Host: https://data-argo.ifremer.fr

Index: argo_bio-profile_index.txt

Convention: argo_bio-profile_index (Bio-Profile directory file of the Argo GDAC)

Loaded: True (311448 records)

Searched: True (234156 matches, 75.1830%)
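The dictionary argument can be read as one condition per parameter, each satisfied when the parameter's data mode is among the requested ones, with `logical` combining the conditions. A sketch of that reading on made-up entries (an assumption about the semantics, not argopy's code):

```python
def search_parameter_data_mode(entries, query, logical="and"):
    """Filter entries on {parameter: mode or [modes]} conditions."""
    if logical not in ("and", "or"):
        raise ValueError("logical must be 'and' or 'or'")
    combine = all if logical == "and" else any

    def match(entry):
        names = entry["parameters"].split()
        modes = dict(zip(names, entry["parameter_data_mode"].replace(" ", "")))
        checks = []
        for param, wanted in query.items():
            wanted = [wanted] if isinstance(wanted, str) else wanted
            # A missing parameter gives None, which never matches.
            checks.append(modes.get(param) in wanted)
        return combine(checks)

    return [e["file"] for e in entries if match(e)]


# Made-up index entries.
entries = [
    {"file": "p1", "parameters": "PRES DOXY BBP700", "parameter_data_mode": "RDD"},
    {"file": "p2", "parameters": "PRES DOXY BBP700", "parameter_data_mode": "RRA"},
    {"file": "p3", "parameters": "PRES DOXY", "parameter_data_mode": "RD"},
]
print(search_parameter_data_mode(entries, {"BBP700": ["R", "A"], "DOXY": "D"}, logical="or"))
```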

Reference tables

The Argo netCDF format is strict and based on a collection of fully documented, standardized variables. All reference tables can be found in the Argo user manual.

However, machine-to-machine access to these tables is often required. This is made possible by the work of the Argo Vocabulary Task Team (AVTT), the team responsible for the NVS collections under the governance of the Argo Data Management Team.

Note

The GitHub organization hosting the AVTT is the ‘NERC Vocabulary Server (NVS)’, aka ‘nvs-vocabs’. It holds a list of NVS collection-specific GitHub repositories. Each Argo GitHub repository is named after its corresponding collection ID (e.g. R01, RR2, R03 etc.). The current list is given here.

Issues related to vocabularies managed by the Argo Data Management Team are handled in this repository.

argopy provides the utility class ArgoNVSReferenceTables to easily fetch and get access to all Argo reference tables. If you already know the name of the reference table you want to retrieve, you can simply get it like this:

In [25]: from argopy import ArgoNVSReferenceTables

In [26]: NVS = ArgoNVSReferenceTables()

In [27]: NVS.tbl('R01')

Out[27]:

altLabel ... id

0 BPROF ... http://vocab.nerc.ac.uk/collection/R01/current...

1 BTRAJ ... http://vocab.nerc.ac.uk/collection/R01/current...

2 META ... http://vocab.nerc.ac.uk/collection/R01/current...

3 MPROF ... http://vocab.nerc.ac.uk/collection/R01/current...

4 MTRAJ ... http://vocab.nerc.ac.uk/collection/R01/current...

5 PROF ... http://vocab.nerc.ac.uk/collection/R01/current...

6 SPROF ... http://vocab.nerc.ac.uk/collection/R01/current...

7 TECH ... http://vocab.nerc.ac.uk/collection/R01/current...

8 TRAJ ... http://vocab.nerc.ac.uk/collection/R01/current...

[9 rows x 5 columns]

The reference table is returned as a pandas.DataFrame. If you want the exact name of this table:

In [28]: NVS.tbl_name('R01')

Out[28]:

('DATA_TYPE',

'Terms describing the type of data contained in an Argo netCDF file. Argo netCDF variable DATA_TYPE is populated by R01 prefLabel.',

'http://vocab.nerc.ac.uk/collection/R01/current/')

If you don’t know the reference table ID, you can search for a word in table titles and/or descriptions with the search method:

In [29]: id_list = NVS.search('sensor')

This will return the list of reference table ids matching your search. It can then be used to retrieve table information:

In [30]: [NVS.tbl_name(id) for id in id_list]

Out[30]:

[('SENSOR',

'Terms describing sensor types mounted on Argo floats. Argo netCDF variable SENSOR is populated by R25 altLabel.',

'http://vocab.nerc.ac.uk/collection/R25/current/'),

('SENSOR_MAKER',

'Terms describing developers and manufacturers of sensors mounted on Argo floats. Argo netCDF variable SENSOR_MAKER is populated by R26 altLabel.',

'http://vocab.nerc.ac.uk/collection/R26/current/'),

('SENSOR_MODEL',

'Terms listing models of sensors mounted on Argo floats. Note: avoid using the manufacturer name and sensor firmware version in new entries when possible. Argo netCDF variable SENSOR_MODEL is populated by R27 altLabel.',

'http://vocab.nerc.ac.uk/collection/R27/current/')]
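Conceptually, such a search is a case-insensitive substring match over each table's title and description. A minimal offline sketch, using abbreviated definitions copied from the table listing in this section; the matching rule is an assumption, not necessarily what `NVS.search` does:

```python
# A few id -> (name, definition) entries, abbreviated from the listing on this page.
TABLES = {
    "R01": ("DATA_TYPE", "Terms describing the type of data contained in an Argo netCDF file."),
    "R25": ("SENSOR", "Terms describing sensor types mounted on Argo floats."),
    "R26": ("SENSOR_MAKER", "Terms describing developers and manufacturers of sensors mounted on Argo floats."),
    "R27": ("SENSOR_MODEL", "Terms listing models of sensors mounted on Argo floats."),
}


def search_tables(tables, word):
    """Return ids of tables whose name or definition contains `word` (case-insensitive)."""
    word = word.lower()
    return [tid for tid, (name, definition) in tables.items()
            if word in name.lower() or word in definition.lower()]


print(search_tables(TABLES, "sensor"))
```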

The full list of available tables is given by the ArgoNVSReferenceTables.all_tbl_name property. It returns a dictionary with table IDs as keys and table name, definition and NVS link as values. Use the ArgoNVSReferenceTables.all_tbl property to retrieve all tables.

In [31]: NVS.all_tbl_name

Out[31]:

OrderedDict([('R01',

('DATA_TYPE',

'Terms describing the type of data contained in an Argo netCDF file. Argo netCDF variable DATA_TYPE is populated by R01 prefLabel.',

'http://vocab.nerc.ac.uk/collection/R01/current/')),

('R03',

('PARAMETER',

'Terms describing individual measured phenomena, used to mark up sets of data in Argo netCDF arrays. Argo netCDF variables PARAMETER and TRAJECTORY_PARAMETERS are populated by R03 altLabel; R03 altLabel is also used to name netCDF profile files parameter variables <PARAMETER>.',

'http://vocab.nerc.ac.uk/collection/R03/current/')),

('R04',

('DATA_CENTRE_CODES',

'Codes for data centres and institutions handling or managing Argo data. Argo netCDF variable DATA_CENTRE is populated by R04 altLabel.',

'http://vocab.nerc.ac.uk/collection/R04/current/')),

('R05',

('POSITION_ACCURACY',

'Accuracy in latitude and longitude measurements received from the positioning system, grouped by location accuracy classes.',

'http://vocab.nerc.ac.uk/collection/R05/current/')),

('R06',

('DATA_STATE_INDICATOR',

'Processing stage of the data based on the concatenation of processing level and class indicators. Argo netCDF variable DATA_STATE_INDICATOR is populated by R06 altLabel.',

'http://vocab.nerc.ac.uk/collection/R06/current/')),

('R07',

('HISTORY_ACTION',

'Coded history information for each action performed on each profile by a data centre. Argo netCDF variable HISTORY_ACTION is populated by R07 altLabel.',

'http://vocab.nerc.ac.uk/collection/R07/current/')),

('R08',

('ARGO_WMO_INST_TYPE',

"Subset of instrument type codes from the World Meteorological Organization (WMO) Common Code Table C-3 (CCT C-3) 1770, named 'Instrument make and type for water temperature profile measurement with fall rate equation coefficients' and available here: https://library.wmo.int/doc_num.php?explnum_id=11283. Argo netCDF variable WMO_INST_TYPE is populated by R08 altLabel.",

'http://vocab.nerc.ac.uk/collection/R08/current/')),

('R09',

('POSITIONING_SYSTEM',

'List of float location measuring systems. Argo netCDF variable POSITIONING_SYSTEM is populated by R09 altLabel.',

'http://vocab.nerc.ac.uk/collection/R09/current/')),

('R10',

('TRANS_SYSTEM',

'List of telecommunication systems. Argo netCDF variable TRANS_SYSTEM is populated by R10 altLabel.',

'http://vocab.nerc.ac.uk/collection/R10/current/')),

('R11',

('RTQC_TESTID',

'List of real-time quality-control tests and corresponding binary identifiers, used as reference to populate the Argo netCDF HISTORY_QCTEST variable.',

'http://vocab.nerc.ac.uk/collection/R11/current/')),

('R12',

('HISTORY_STEP',

'Data processing step codes for history record. Argo netCDF variable TRANS_SYSTEM is populated by R12 altLabel.',

'http://vocab.nerc.ac.uk/collection/R12/current/')),

('R13',

('OCEAN_CODE',

'Ocean area codes assigned to each profile in the Metadata directory (index) file of the Argo Global Assembly Centre.',

'http://vocab.nerc.ac.uk/collection/R13/current/')),

('R15',

('MEASUREMENT_CODE_ID',

'Measurement code IDs used in Argo Trajectory netCDF files. Argo netCDF variable MEASUREMENT_CODE is populated by R15 altLabel.',

'http://vocab.nerc.ac.uk/collection/R15/current/')),

('R16',

('VERTICAL_SAMPLING_SCHEME',

'Profile sampling schemes and sampling methods. Argo netCDF variable VERTICAL_SAMPLING_SCHEME is populated by R16 altLabel.',

'http://vocab.nerc.ac.uk/collection/R16/current/')),

('R18',

('CONFIG_PARAMETER_NAME',

"List of float configuration settings selected by the float Principal Investigator (PI). Configuration parameters may or may not be reported by the float, and do not constitute float measurements. Configuration parameters selected for a float are stored in the float 'meta.nc' file, under CONFIG_PARAMETER_NAME. Each configuration parameter name has an associated value, stored in CONFIG_PARAMETER_VALUE. Argo netCDF variable CONFIG_PARAMETER_NAME is populated by R18 prefLabel.",

'http://vocab.nerc.ac.uk/collection/R18/current/')),

('R19',

('STATUS',

'Flag scale for values in all Argo netCDF cycle timing variables. Argo netCDF cycle timing variables JULD_<RTV>_STATUS are populated by R19 altLabel.',

'http://vocab.nerc.ac.uk/collection/R19/current/')),

('R20',

('GROUNDED',

'Codes to indicate the best estimate of whether the float touched the ground during a specific cycle. Argo netCDF variable GROUNDED in the Trajectory file is populated by R20 altLabel.',

'http://vocab.nerc.ac.uk/collection/R20/current/')),

('R21',

('REPRESENTATIVE_PARK_PRESSURE_STATUS',

'Argo status flag on the Representative Park Pressure (RPP). Argo netCDF variable REPRESENTATIVE_PARK_PRESSURE_STATUS in the Trajectory file is populated by R21 altLabel.',

'http://vocab.nerc.ac.uk/collection/R21/current/')),

('R22',

('PLATFORM_FAMILY',

'List of platform family/category of Argo floats. Argo netCDF variable PLATFORM_FAMILY is populated by R22 altLabel.',

'http://vocab.nerc.ac.uk/collection/R22/current/')),

('R23',

('PLATFORM_TYPE',

'List of Argo float types. Argo netCDF variable PLATFORM_TYPE is populated by R23 altLabel.',

'http://vocab.nerc.ac.uk/collection/R23/current/')),

('R24',

('PLATFORM_MAKER',

'List of Argo float manufacturers. Argo netCDF variable PLATFORM_MAKER is populated by R24 altLabel.',

'http://vocab.nerc.ac.uk/collection/R24/current/')),

('R25',

('SENSOR',

'Terms describing sensor types mounted on Argo floats. Argo netCDF variable SENSOR is populated by R25 altLabel.',

'http://vocab.nerc.ac.uk/collection/R25/current/')),

('R26',

('SENSOR_MAKER',

'Terms describing developers and manufacturers of sensors mounted on Argo floats. Argo netCDF variable SENSOR_MAKER is populated by R26 altLabel.',

'http://vocab.nerc.ac.uk/collection/R26/current/')),

('R27',

('SENSOR_MODEL',

'Terms listing models of sensors mounted on Argo floats. Note: avoid using the manufacturer name and sensor firmware version in new entries when possible. Argo netCDF variable SENSOR_MODEL is populated by R27 altLabel.',

'http://vocab.nerc.ac.uk/collection/R27/current/')),

('R28',

('CONTROLLER_BOARD_TYPE',

'List of Argo floats controller board types and generations. Argo netCDF variables CONTROLLER_BOARD_TYPE_PRIMARY and, when needed, CONTROLLER_BOARD_TYPE_SECONDARY, are populated by R28 altLabel.',

'http://vocab.nerc.ac.uk/collection/R28/current/')),

('R40',

('PI_NAME',

'List of Principal Investigator (PI) names in charge of Argo floats. Argo netCDF variable PI_NAME is populated by R40 altLabel.',

'http://vocab.nerc.ac.uk/collection/R40/current/')),

('RD2',

('DM_QC_FLAG',

"Quality flag scale for delayed-mode measurements. Argo netCDF variables <PARAMETER>_ADJUSTED_QC in 'D' mode are populated by RD2 altLabel.",

'http://vocab.nerc.ac.uk/collection/RD2/current/')),

('RMC',

('MEASUREMENT_CODE_CATEGORY',

"Categories of trajectory measurement codes listed in NVS collection 'R15'",

'http://vocab.nerc.ac.uk/collection/RMC/current/')),

('RP2',

('PROF_QC_FLAG',

'Quality control flag scale for whole profiles. Argo netCDF variables PROFILE_<PARAMETER>_QC are populated by RP2 altLabel.',

'http://vocab.nerc.ac.uk/collection/RP2/current/')),

('RR2',

('RT_QC_FLAG',

"Quality flag scale for real-time measurements. Argo netCDF variables <PARAMETER>_QC in 'R' mode and <PARAMETER>_ADJUSTED_QC in 'A' mode are populated by RR2 altLabel.",

'http://vocab.nerc.ac.uk/collection/RR2/current/')),

('RTV',

('CYCLE_TIMING_VARIABLE',

"Timing variables representing stages of an Argo float profiling cycle, most of which are associated with a trajectory measurement code ID listed in NVS collection 'R15'. Argo netCDF cycle timing variable names JULD_<RTV>_STATUS are constructed by RTV altLabel.",

'http://vocab.nerc.ac.uk/collection/RTV/current/'))])

Deployment Plan

It may be useful to retrieve metadata about Argo deployments. argopy can use the OceanOPS API for metadata access to retrieve this information. The returned deployment plan is a list of Argo floats, together with their deployment location, date, WMO, program, country, float model and current status.

To fetch the Argo deployment plan, argopy provides a dedicated utility class, OceanOPSDeployments, that can be used like this:

In [32]: from argopy import OceanOPSDeployments

In [33]: deployment = OceanOPSDeployments()

In [34]: df = deployment.to_dataframe()


OceanOPSDeployments can also take an index box definition as argument in order to restrict the deployment plan selection to a specific region or period:

deployment = OceanOPSDeployments([-90, 0, 0, 90])

# deployment = OceanOPSDeployments([-20, 0, 42, 51, '2020-01', '2021-01'])

# deployment = OceanOPSDeployments([-180, 180, -90, 90, '2020-01', None])

Note that if the starting date is not provided, it will be set automatically to the current date.
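The box handling described above can be sketched as a small normalization step. `normalize_box` is a hypothetical helper, not part of argopy; it pads a 4- or 5-element box to 6 elements, sets a missing start date to today (as stated above), and leaves a missing end date open as `None` (an assumption).

```python
from datetime import date


def normalize_box(box):
    """Return [lon_min, lon_max, lat_min, lat_max, start, end] for a deployment query."""
    box = list(box)
    if len(box) not in (4, 5, 6):
        raise ValueError("box must have 4, 5 or 6 elements")
    box += [None] * (6 - len(box))  # pad missing dates with None
    if box[4] is None:
        box[4] = date.today().isoformat()  # default start date: today
    return box


print(normalize_box([-90, 0, 0, 90]))
print(normalize_box([-20, 0, 42, 51, "2020-01", "2021-01"]))
```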

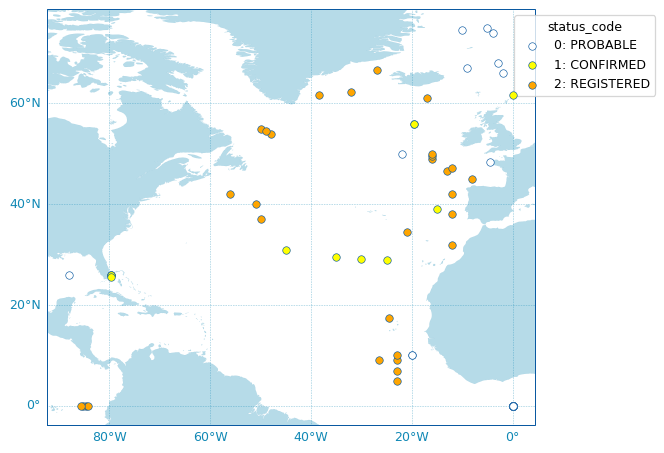

Finally, OceanOPSDeployments comes with a plotting method:

fig, ax = deployment.plot_status()

Note

The list of possible deployment status name/code is given by:

OceanOPSDeployments().status_code

| Status | Id | Description |
|---|---|---|
| PROBABLE | 0 | Starting status for some platforms, when there is only a few metadata available, like rough deployment location and date. The platform may be deployed |
| CONFIRMED | 1 | Automatically set when a ship is attached to the deployment information. The platform is ready to be deployed, deployment is planned |
| REGISTERED | 2 | Starting status for most of the networks, when deployment planning is not done. The deployment is certain, and a notification has been sent via the OceanOPS system |
| OPERATIONAL | 6 | Automatically set when the platform is emitting a pulse and observations are distributed within a certain time interval |
| INACTIVE | 4 | The platform is not emitting a pulse since a certain time |
| CLOSED | 5 | The platform is not emitting a pulse since a long time, it is considered as dead |
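When summarizing a deployment plan you may want to decode status ids into names. A small sketch with the mapping copied from the status table above; `count_by_status` is a hypothetical helper and the input list is made up:

```python
from collections import Counter

# Status name -> id, as listed in the status table.
STATUS_ID = {
    "PROBABLE": 0,
    "CONFIRMED": 1,
    "REGISTERED": 2,
    "OPERATIONAL": 6,
    "INACTIVE": 4,
    "CLOSED": 5,
}
ID_STATUS = {v: k for k, v in STATUS_ID.items()}


def count_by_status(status_ids):
    """Count floats per status name from a sequence of status ids."""
    return Counter(ID_STATUS[i] for i in status_ids)


print(count_by_status([6, 6, 4, 1]))
```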

ADMT Documentation

More than 20 PDF manuals have been produced by the Argo Data Management Team. The ArgoDocs class makes it easy to navigate this collection.

If you don’t know where to start, you can simply list all available documents:

In [36]: from argopy import ArgoDocs

In [37]: ArgoDocs().list

Out[37]:

category ... id

0 Argo data formats ... 29825

1 Quality control ... 33951

2 Quality control ... 46542

3 Quality control ... 40879

4 Quality control ... 35385

5 Quality control ... 84370

6 Quality control ... 62466

7 Cookbooks ... 41151

8 Cookbooks ... 29824

9 Cookbooks ... 78994

10 Cookbooks ... 39795

11 Cookbooks ... 39459

12 Cookbooks ... 39468

13 Cookbooks ... 47998

14 Cookbooks ... 54541

15 Cookbooks ... 46121

16 Cookbooks ... 51541

17 Cookbooks ... 57195

18 Cookbooks ... 46120

19 Cookbooks ... 52154

20 Cookbooks ... 55637

21 Cookbooks ... 46202

22 Cookbooks ... 57195

23 Cookbooks ... 46121

24 Cookbooks ... 57195

25 Quality Control ... 97828

[26 rows x 4 columns]

Or search for a word in the title and/or abstract:

In [38]: results = ArgoDocs().search("oxygen")

In [39]: for docid in results:

....: print("\n", ArgoDocs(docid))

....:

<argopy.ArgoDocs>

Title: Argo quality control manual for dissolved oxygen concentration

DOI: 10.13155/46542

url: https://dx.doi.org/10.13155/46542

last pdf: https://archimer.ifremer.fr/doc/00354/46542/82301.pdf

Authors: Thierry, Virginie; Bittig, Henry

Abstract: This document is the Argo quality control manual for Dissolved oxygen concentration. It describes two levels of quality control: - The first level is the real-time system that performs a set of agreed automatic checks. Adjustment in real-time can also be performed and the real-time system can evaluate quality flags for adjusted fields. - The second level is the delayed-mode quality control system.

<argopy.ArgoDocs>

Title: Processing Argo oxygen data at the DAC level

DOI: 10.13155/39795

url: https://dx.doi.org/10.13155/39795

last pdf: https://archimer.ifremer.fr/doc/00287/39795/94062.pdf

Authors: THIERRY, Virginie; Bittig, Henry; GILBERT, Denis; KOBAYASHI, Taiyo; KANAKO, Sato; SCHMID, Claudia

Abstract: This document does NOT address the issue of oxygen data quality control (either real-time or delayed mode). As a preliminary step towards that goal, this document seeks to ensure that all countries deploying floats equipped with oxygen sensors document the data and metadata related to these floats properly. We produced this document in response to action item 14 from the AST-10 meeting in Hangzhou (March 22-23, 2009). Action item 14: Denis Gilbert to work with Taiyo Kobayashi and Virginie Thierry to ensure DACs are processing oxygen data according to recommendations. If the recommendations contained herein are followed, we will end up with a more uniform set of oxygen data within the Argo data system, allowing users to begin analysing not only their own oxygen data, but also those of others, in the true spirit of Argo data sharing. Indications provided in this document are valid as of the date of writing this document. It is very likely that changes in sensors, calibrations and conversions equations will occur in the future. Please contact V. Thierry (vthierry@ifremer.fr) for any inconsistencies or missing information. A dedicated webpage on the Argo Data Management website (www) contains all information regarding Argo oxygen data management : current and previous version of this cookbook, oxygen sensor manuals, calibration sheet examples, examples of matlab code to process oxygen data, test data, etc..

Then, using the DOI number of a document, you can easily retrieve it:

In [40]: ArgoDocs(35385)

Out[40]:

<argopy.ArgoDocs>

Title: BGC-Argo quality control manual for the Chlorophyll-A concentration

DOI: 10.13155/35385

url: https://dx.doi.org/10.13155/35385

last pdf: https://archimer.ifremer.fr/doc/00243/35385/60181.pdf

Authors: SCHMECHTIG, Catherine; CLAUSTRE, Herve; POTEAU, Antoine; D'ORTENZIO, Fabrizio; Schallenberg, Christina; Trull, Thomas; Xing, Xiaogang

Abstract: This document is the BGC-Argo quality control manual for Chlorophyll A concentration. It describes the method used in real-time to apply quality control flags to Chlorophyll A concentration calculated from specific sensors mounted on Argo profiling floats.

and open it in your browser:

# ArgoDocs(35385).show()

# ArgoDocs(35385).open_pdf(page=12)
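Each document id is the suffix of a DOI under the 10.13155 prefix, as the `DOI` and `url` fields in the outputs above show. A sketch of building the DOI link from an id; `doi_url` is a hypothetical helper, and the browser call is left commented out:

```python
def doi_url(docid):
    """Return the dx.doi.org link for an Argo document id (e.g. 35385)."""
    return f"https://dx.doi.org/10.13155/{int(docid)}"


print(doi_url(35385))
# import webbrowser; webbrowser.open(doi_url(35385))  # open the landing page
```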