What’s New

Coming up next

Internals

v0.1.15 (12 Dec. 2023)

Internals

Fix bug whereby user name could not be retrieved using `getpass.getuser()`. This closes #310 and allows argopy to be integrated into the EU Galaxy tools for ecology. (#311) by G. Maze.
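For context, `getpass.getuser()` can fail in environments where no login name is defined, as can happen in some containers. The sketch below shows one defensive way to handle that case. The `safe_username` helper and its `default` value are hypothetical and are not taken from the argopy fix itself.

```python
import getpass


def safe_username(default: str = "unknown") -> str:
    """Return the current user name, or `default` when it cannot be resolved."""
    try:
        return getpass.getuser()
    except (KeyError, ImportError, OSError):
        # getpass.getuser() raises when neither the environment nor the
        # password database identifies the user; the exception type depends
        # on the Python version and platform.
        return default


print(safe_username())
```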

v0.1.14 (29 Sep. 2023)

New in version v0.1.14: This release brings to the default pip and conda installs of argopy all the new features introduced in the release candidates v0.1.14rc2 and v0.1.14rc1. For simplicity, all of these changes are merged into this v0.1.14 changelog.

Features and front-end API

argopy now supports the BGC dataset in `expert` user mode for the `erddap` data source. The BGC-Argo content of synthetic multi-profile files is now available from the Ifremer erddap. As for the core dataset, you can fetch data for a region, float(s) or profile(s). One novelty with regard to core is that you can restrict data fetching to some parameters and, furthermore, require that some of these parameters contain no NaNs. Check out the new documentation page for Dataset. (#278) by G. Maze

import argopy

from argopy import DataFetcher

argopy.set_options(src='erddap', mode='expert')

DataFetcher(ds='bgc') # All variables found in the access point will be returned

DataFetcher(ds='bgc', params='all') # Default: All variables found in the access point will be returned

DataFetcher(ds='bgc', params='DOXY') # Only the DOXY variable will be returned

DataFetcher(ds='bgc', params=['DOXY', 'BBP700']) # Only DOXY and BBP700 will be returned

DataFetcher(ds='bgc', measured=None) # Default: all params are allowed to have NaNs

DataFetcher(ds='bgc', measured='all') # All params found in the access point cannot be NaNs

DataFetcher(ds='bgc', measured='DOXY') # Only DOXY cannot be NaNs

DataFetcher(ds='bgc', measured=['DOXY', 'BBP700']) # Only DOXY and BBP700 cannot be NaNs

DataFetcher(ds='bgc', params='all', measured=None) # Return the largest possible dataset

DataFetcher(ds='bgc', params='all', measured='all') # Return the smallest possible dataset

DataFetcher(ds='bgc', params='all', measured=['DOXY', 'BBP700']) # Return all possible params for points where DOXY and BBP700 are not NaN
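The difference between `params` (which variables are returned) and `measured` (which variables must not be NaN) can be illustrated on toy data. This is a pure-Python sketch of the semantics described above, not argopy code: the `select` helper and the sample values are hypothetical.

```python
import math

# Toy BGC samples, one dict per measurement point (values are made up).
points = [
    {"PRES": 5.0, "DOXY": 210.0, "BBP700": 0.002},
    {"PRES": 10.0, "DOXY": math.nan, "BBP700": 0.003},
    {"PRES": 15.0, "DOXY": 205.0, "BBP700": math.nan},
]


def _as_list(value):
    return [value] if isinstance(value, str) else list(value)


def select(points, params="all", measured=None):
    """Keep the `params` columns and drop points where a `measured` column is NaN."""
    available = [name for name in points[0] if name != "PRES"]
    keep = available if params == "all" else _as_list(params)
    if measured is None:
        required = []            # NaNs allowed everywhere
    elif measured == "all":
        required = keep          # no NaN allowed in any returned parameter
    else:
        required = _as_list(measured)
    rows = [p for p in points if not any(math.isnan(p[name]) for name in required)]
    return [{"PRES": p["PRES"], **{name: p[name] for name in keep}} for p in rows]


print(len(select(points)))                  # largest selection
print(len(select(points, measured="all")))  # smallest selection
```

With these toy points, `measured=None` keeps all three points while `measured='all'` keeps only the first one, which is why the two settings give the largest and smallest possible datasets.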

New methods in the ArgoIndex for BGC. The `ArgoIndex` now has full support for the BGC profile index files, both bio and synthetic index. In particular, it is possible to search for profiles with specific data modes on parameters. (#278) by G. Maze

from argopy import ArgoIndex

idx = ArgoIndex(index_file="bgc-b") # Use keywords instead of exact file names: `core`, `bgc-b`, `bgc-s`

idx.search_params(['C1PHASE_DOXY', 'DOWNWELLING_PAR']) # Search for profiles with parameters

idx.search_parameter_data_mode({'TEMP': 'D'}) # Search for profiles with specific data modes

idx.search_parameter_data_mode({'BBP700': 'D'})

idx.search_parameter_data_mode({'DOXY': ['R', 'A']})

idx.search_parameter_data_mode({'DOXY': 'D', 'CDOM': 'D'}, logical='or')
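To make the role of the `logical` argument concrete, here is a pure-Python sketch of a data-mode search on a toy index. It assumes that criteria are combined with a logical "and" unless `logical='or'` is given. The `search_data_mode` helper and the toy profiles are hypothetical and do not reproduce the ArgoIndex implementation.

```python
# Toy index rows: parameter name -> data mode ('R', 'A' or 'D'); made-up values.
profiles = [
    {"id": 1, "modes": {"DOXY": "D", "CDOM": "R"}},
    {"id": 2, "modes": {"DOXY": "A", "CDOM": "D"}},
    {"id": 3, "modes": {"DOXY": "D", "CDOM": "D"}},
    {"id": 4, "modes": {"DOXY": "R"}},
]


def search_data_mode(profiles, criteria, logical="and"):
    """Return ids of profiles whose parameter data modes match `criteria`."""
    combine = all if logical == "and" else any
    hits = []
    for prof in profiles:
        checks = []
        for param, wanted in criteria.items():
            wanted = [wanted] if isinstance(wanted, str) else list(wanted)
            checks.append(prof["modes"].get(param) in wanted)
        if combine(checks):
            hits.append(prof["id"])
    return hits


print(search_data_mode(profiles, {"DOXY": "D", "CDOM": "D"}))
print(search_data_mode(profiles, {"DOXY": "D", "CDOM": "D"}, logical="or"))
```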

New xarray argo accessor features. Easily retrieve an Argo sample index and domain extent with the `index` and `domain` properties. Get a list with all possible (PLATFORM_NUMBER, CYCLE_NUMBER) with the `list_WMO_CYC` method. (#278) by G. Maze

New search methods for Argo reference tables. It is now possible to search for a string in table titles and/or descriptions using the `related.ArgoNVSReferenceTables.search()` method.

from argopy import ArgoNVSReferenceTables

id_list = ArgoNVSReferenceTables().search('sensor')

Updated documentation. In order to better introduce new features, we updated the documentation structure and content.

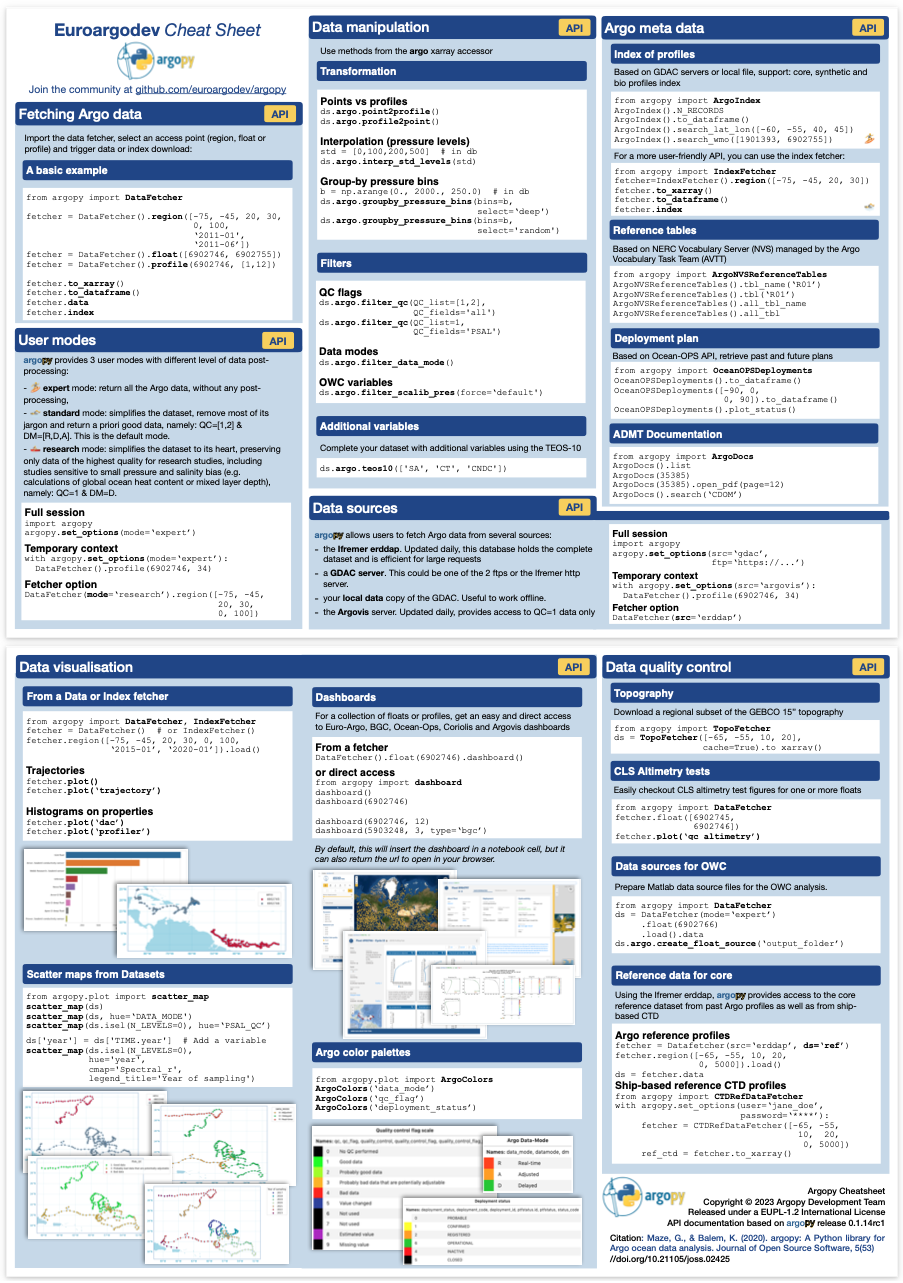

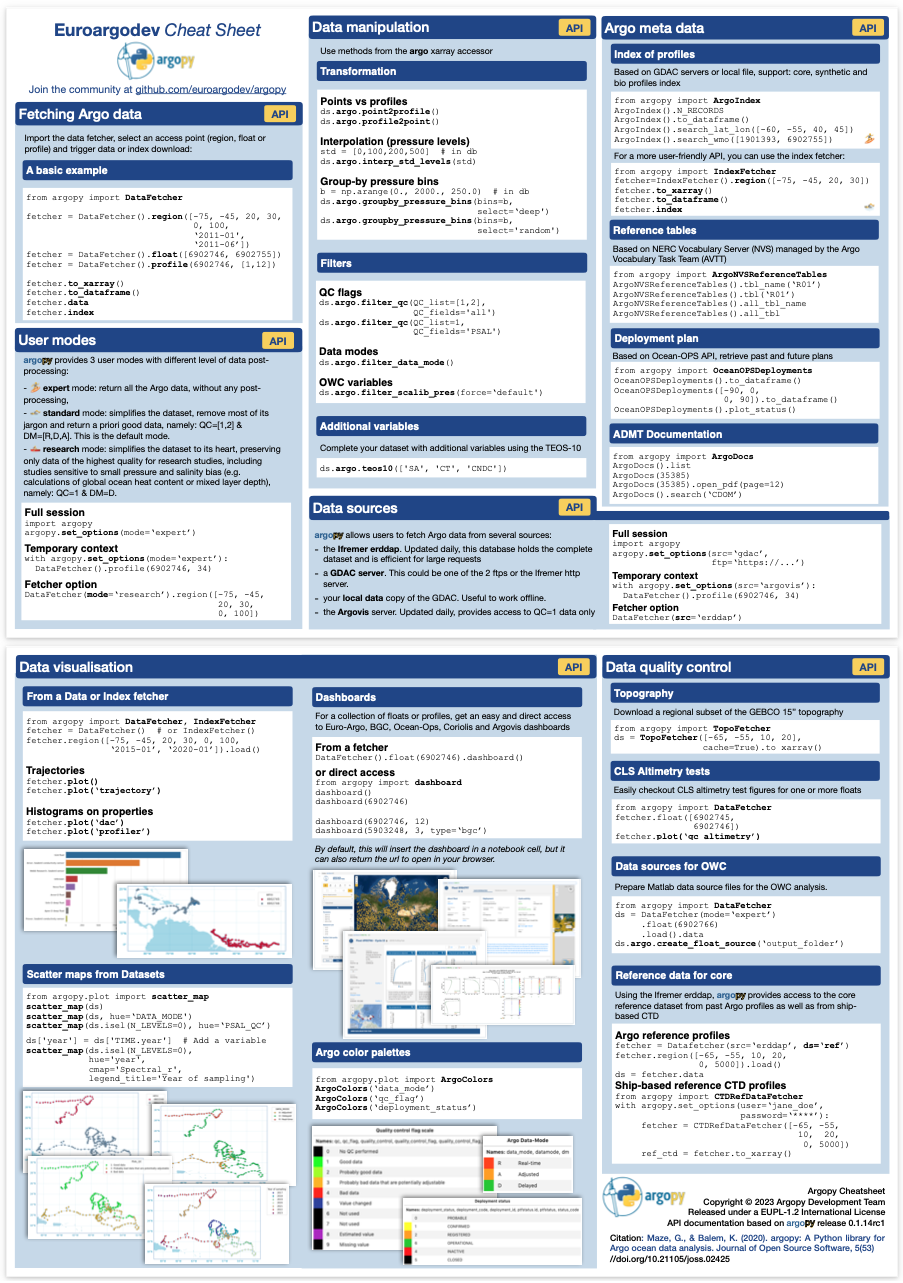

argopy cheatsheet! Get most of the argopy API in a 2-page PDF!

Our internal Argo index store is promoted as a frontend feature. The `IndexFetcher` is a user-friendly fetcher built on top of our internal Argo index file store. But if you are familiar with Argo index files and/or care about performance, you may be interested in using the Argo index store directly. We thus decided to promote this internal feature as a frontend class, `ArgoIndex`. See Store: Low-level Argo Index access. (#270) by G. Maze

Easy access to all Argo manuals from the ADMT. More than 20 PDF manuals have been produced by the Argo Data Management Team. Using the new `ArgoDocs` class, it’s now easier to navigate this great database for Argo experts. All details in ADMT Documentation. (#268) by G. Maze

from argopy import ArgoDocs

ArgoDocs().list

ArgoDocs(35385)

ArgoDocs(35385).ris

ArgoDocs(35385).abstract

ArgoDocs(35385).show()

ArgoDocs(35385).open_pdf()

ArgoDocs(35385).open_pdf(page=12)

ArgoDocs().search("CDOM")

New ‘research’ user mode. This new feature implements automatic filtering of Argo data following international recommendations for research/climate studies. With this user mode, only Delayed Mode data with good QC flags are returned. Check out the User mode (🏄, 🏊, 🚣) section for all the details. (#265) by G. Maze
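The kind of filtering performed by this user mode can be pictured with a small pure-Python sketch. It assumes that "good QC" means a flag value of 1 and ignores any other criteria documented in the User mode section. The `research_filter` helper and the samples are hypothetical.

```python
# Toy samples: a value, its QC flag and the data mode of its profile (made up).
samples = [
    {"TEMP": 12.1, "TEMP_QC": 1, "DATA_MODE": "D"},
    {"TEMP": 12.4, "TEMP_QC": 1, "DATA_MODE": "R"},  # real time: rejected
    {"TEMP": 99.9, "TEMP_QC": 4, "DATA_MODE": "D"},  # bad flag: rejected
]


def research_filter(samples, good_flags=(1,)):
    """Keep delayed-mode samples whose QC flag is in `good_flags`."""
    return [
        s for s in samples
        if s["DATA_MODE"] == "D" and s["TEMP_QC"] in good_flags
    ]


print(research_filter(samples))
```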

argopy now provides a specific xarray engine to properly read Argo netcdf files. Using `engine='argo'` in `xarray.open_dataset()`, all variables will be properly cast, i.e. returned with their expected data types, which is not the case otherwise. This works with ALL Argo netcdf file types (as listed in the Reference table R01). Some details here: `argopy.xarray.ArgoEngine`. (#208) by G. Maze

import xarray as xr

ds = xr.open_dataset("dac/aoml/1901393/1901393_prof.nc", engine='argo')

argopy can now provide authenticated access to the Argo CTD reference database for DMQC. Using the new user/password argopy options, it is possible to fetch the Argo CTD reference database with the `CTDRefDataFetcher` class. (#256) by G. Maze

import argopy

from argopy import CTDRefDataFetcher

with argopy.set_options(user="john_doe", password="***"):

    f = CTDRefDataFetcher(box=[15, 30, -70, -60, 0, 5000.0])

    ds = f.to_xarray()

Warning

argopy is ready, but the Argo CTD reference database for DMQC is not fully published on the Ifremer ERDDAP yet. This new feature will thus be fully operational soon. Until then, argopy should raise an ErddapHTTPNotFound error when using the new fetcher.

New option to control the expiration time of cache files: `cache_expiration`.
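The idea behind an expiration delay can be sketched with the standard library alone. The sketch assumes that the delay is expressed in seconds and that a cache file's age is measured from its last modification time. Both are assumptions, and the `is_expired` helper is hypothetical rather than argopy's internal logic.

```python
import os
import tempfile
import time


def is_expired(path, max_age):
    """True when `path` was last modified more than `max_age` seconds ago."""
    return (time.time() - os.path.getmtime(path)) > max_age


# Create an empty file standing in for a cache entry.
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    cache_file = tmp.name

fresh = is_expired(cache_file, max_age=86400)

# Pretend the file was written two days ago, then check again.
two_days_ago = time.time() - 2 * 86400
os.utime(cache_file, (two_days_ago, two_days_ago))
stale = is_expired(cache_file, max_age=86400)

os.remove(cache_file)
print(fresh, stale)
```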

Internals

Utilities refactoring. All classes and functions have been refactored to more appropriate locations like `argopy.utils` or `argopy.related`. A deprecation warning message should be displayed every time utilities are used from the deprecated locations. (#290) by G. Maze

Fix bugs due to fsspec new internal cache handling and Windows specifics. (#293) by G. Maze

New utility class `utils.MonitoredThreadPoolExecutor` to handle parallelization with a multi-threading pool that provides a notebook or terminal computation progress dashboard. This class is used by the httpstore open_mfdataset method for erddap requests.

New utilities to handle a collection of datasets: `utils.drop_variables_not_in_all_datasets()` will drop variables that are not in all datasets (the lowest common denominator) and `utils.fill_variables_not_in_all_datasets()` will add empty variables to datasets so that the whole collection has the same data_vars and coords. These functions are used by stores to concat/merge a collection of datasets (chunks).

`related.load_dict()` now relies on `ArgoNVSReferenceTables` instead of static pickle files.

`argopy.ArgoColors` colormap for Argo Data-Mode now has a fourth value to account for a white space FillValue.

New quick and dirty plot method `plot.scatter_plot()`.

Refactor pickle files in argopy/assets as json files in argopy/static/assets.

Refactor list of variables by data types used in `related.cast_Argo_variable_type()` into assets json files in argopy/static/assets.

Change of behaviour: when setting the cachedir option, the path is no longer tested for existence but for being writable, and is created if it doesn’t exist (but this seems to break CI upstream on Windows).

And misc. bug and warning fixes all over the code.
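As an illustration of what a monitored thread pool does, the sketch below runs tasks in a `concurrent.futures.ThreadPoolExecutor` and reports progress as each one completes. It is a simplified stand-in written with the standard library only: `fetch` and `run_monitored` are hypothetical names, and the real utility class renders a dashboard rather than printing lines.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def fetch(chunk_id):
    # Stand-in for one erddap request; a real task would download a chunk.
    return chunk_id * chunk_id


def run_monitored(tasks, max_workers=4):
    """Run `fetch` on every task in a thread pool, printing progress."""
    tasks = list(tasks)
    results = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(fetch, task): task for task in tasks}
        for done, future in enumerate(as_completed(futures), start=1):
            results[futures[future]] = future.result()
            print(f"{done}/{len(futures)} tasks completed")
    # Return results in submission order, whatever the completion order was.
    return [results[task] for task in tasks]


squares = run_monitored(range(5))
```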

Update new argovis dashboard links for floats and profiles. (#271) by G. Maze

Index store can now export search results to standard Argo index file format. See all details in Store: Low-level Argo Index access. (#260) by G. Maze

from argopy import ArgoIndex as indexstore

# or:

# from argopy.stores import indexstore_pd as indexstore

# or:

# from argopy.stores import indexstore_pa as indexstore

idx = indexstore().search_wmo(3902131) # Perform any search

idx.to_indexfile('short_index.txt') # export search results as standard Argo index csv file

Index store can now load/search the Argo Bio and Synthetic profile index files. Simply give the name of the Bio or Synthetic Profile index file to retrieve the full index. This store also comes with a new search criterion for BGC: by parameters. See all details in Store: Low-level Argo Index access. (#261) by G. Maze

from argopy import ArgoIndex as indexstore

# or:

# from argopy.stores import indexstore_pd as indexstore

# or:

# from argopy.stores import indexstore_pa as indexstore

idx = indexstore(index_file="argo_bio-profile_index.txt").load()

idx.search_params(['C1PHASE_DOXY', 'DOWNWELLING_PAR'])

Use a mocked server for all http and GDAC ftp requests in CI tests (#249, #252, #255) by G. Maze

Removed support for minimal dependency requirements and for python 3.7. (#252) by G. Maze

Changed License from Apache to EUPL 1.2

Breaking changes

v0.1.14rc2 (27 Jul. 2023)

Features and front-end API

argopy now supports the BGC dataset in `expert` user mode for the `erddap` data source. The BGC-Argo content of synthetic multi-profile files is now available from the Ifremer erddap. As for the core dataset, you can fetch data for a region, float(s) or profile(s). One novelty with regard to core is that you can restrict data fetching to some parameters and, furthermore, require that some of these parameters contain no NaNs. Check out the new documentation page for Dataset. (#278) by G. Maze

import argopy

from argopy import DataFetcher

argopy.set_options(src='erddap', mode='expert')

DataFetcher(ds='bgc') # All variables found in the access point will be returned

DataFetcher(ds='bgc', params='all') # Default: All variables found in the access point will be returned

DataFetcher(ds='bgc', params='DOXY') # Only the DOXY variable will be returned

DataFetcher(ds='bgc', params=['DOXY', 'BBP700']) # Only DOXY and BBP700 will be returned

DataFetcher(ds='bgc', measured=None) # Default: all params are allowed to have NaNs

DataFetcher(ds='bgc', measured='all') # All params found in the access point cannot be NaNs

DataFetcher(ds='bgc', measured='DOXY') # Only DOXY cannot be NaNs

DataFetcher(ds='bgc', measured=['DOXY', 'BBP700']) # Only DOXY and BBP700 cannot be NaNs

DataFetcher(ds='bgc', params='all', measured=None) # Return the largest possible dataset

DataFetcher(ds='bgc', params='all', measured='all') # Return the smallest possible dataset

DataFetcher(ds='bgc', params='all', measured=['DOXY', 'BBP700']) # Return all possible params for points where DOXY and BBP700 are not NaN

New methods in the ArgoIndex for BGC. The `ArgoIndex` now has full support for the BGC profile index files, both bio and synthetic index. In particular, it is possible to search for profiles with specific data modes on parameters. (#278) by G. Maze

from argopy import ArgoIndex

idx = ArgoIndex(index_file="bgc-b") # Use keywords instead of exact file names: `core`, `bgc-b`, `bgc-s`

idx.search_params(['C1PHASE_DOXY', 'DOWNWELLING_PAR']) # Search for profiles with parameters

idx.search_parameter_data_mode({'TEMP': 'D'}) # Search for profiles with specific data modes

idx.search_parameter_data_mode({'BBP700': 'D'})

idx.search_parameter_data_mode({'DOXY': ['R', 'A']})

idx.search_parameter_data_mode({'DOXY': 'D', 'CDOM': 'D'}, logical='or')

New xarray argo accessor features. Easily retrieve an Argo sample index and domain extent with the `index` and `domain` properties. Get a list with all possible (PLATFORM_NUMBER, CYCLE_NUMBER) with the `list_WMO_CYC` method. (#278) by G. Maze

New search methods for Argo reference tables. It is now possible to search for a string in table titles and/or descriptions using the `related.ArgoNVSReferenceTables.search()` method.

from argopy import ArgoNVSReferenceTables

id_list = ArgoNVSReferenceTables().search('sensor')

Updated documentation. In order to better introduce new features, we updated the documentation structure and content.

Internals

New utility class `utils.MonitoredThreadPoolExecutor` to handle parallelization with a multi-threading pool that provides a notebook or terminal computation progress dashboard. This class is used by the httpstore open_mfdataset method for erddap requests.

New utilities to handle a collection of datasets: `utils.drop_variables_not_in_all_datasets()` will drop variables that are not in all datasets (the lowest common denominator) and `utils.fill_variables_not_in_all_datasets()` will add empty variables to datasets so that the whole collection has the same data_vars and coords. These functions are used by stores to concat/merge a collection of datasets (chunks).

`related.load_dict()` now relies on `ArgoNVSReferenceTables` instead of static pickle files.

`argopy.ArgoColors` colormap for Argo Data-Mode now has a fourth value to account for a white space FillValue.

New quick and dirty plot method `plot.scatter_plot()`.

Refactor pickle files in argopy/assets as json files in argopy/static/assets.

Refactor list of variables by data types used in `related.cast_Argo_variable_type()` into assets json files in argopy/static/assets.

Change of behaviour: when setting the cachedir option, the path is no longer tested for existence but for being writable, and is created if it doesn’t exist (but this seems to break CI upstream on Windows).

And misc. bug and warning fixes all over the code.
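The two strategies for harmonising a collection of datasets, dropping down to the common variables or filling in the missing ones, can be sketched with plain dictionaries standing in for datasets. This is only an illustration of the idea: `drop_not_in_all` and `fill_not_in_all` are hypothetical helpers that work on dicts of lists, not on xarray objects.

```python
import math

# Two toy "datasets": variable name -> list of values (made-up numbers).
datasets = [
    {"TEMP": [1.0], "PSAL": [35.0], "DOXY": [200.0]},
    {"TEMP": [2.0], "PSAL": [34.9]},
]


def drop_not_in_all(datasets):
    """Keep only the variables present in every dataset (intersection)."""
    common = set.intersection(*(set(ds) for ds in datasets))
    return [{k: v for k, v in ds.items() if k in common} for ds in datasets]


def fill_not_in_all(datasets):
    """Add NaN-filled variables so every dataset has all variables (union)."""
    every = sorted(set().union(*datasets))
    filled = []
    for ds in datasets:
        n = len(next(iter(ds.values())))  # number of samples in this dataset
        filled.append({name: ds.get(name, [math.nan] * n) for name in every})
    return filled


print([sorted(ds) for ds in drop_not_in_all(datasets)])
print([sorted(ds) for ds in fill_not_in_all(datasets)])
```

Either way, all members of the collection end up with the same variable names, which is what a later concatenation or merge step needs.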

Breaking changes

Some documentation pages may have moved to new urls.

v0.1.14rc1 (31 May 2023)

Features and front-end API

argopy cheatsheet! Get most of the argopy API in a 2-page PDF!

Our internal Argo index store is promoted as a frontend feature. The `IndexFetcher` is a user-friendly fetcher built on top of our internal Argo index file store. But if you are familiar with Argo index files and/or care about performance, you may be interested in using the Argo index store directly. We thus decided to promote this internal feature as a frontend class, `ArgoIndex`. See Store: Low-level Argo Index access. (#270) by G. Maze

Easy access to all Argo manuals from the ADMT. More than 20 PDF manuals have been produced by the Argo Data Management Team. Using the new `ArgoDocs` class, it’s now easier to navigate this great database for Argo experts. All details in ADMT Documentation. (#268) by G. Maze

from argopy import ArgoDocs

ArgoDocs().list

ArgoDocs(35385)

ArgoDocs(35385).ris

ArgoDocs(35385).abstract

ArgoDocs(35385).show()

ArgoDocs(35385).open_pdf()

ArgoDocs(35385).open_pdf(page=12)

ArgoDocs().search("CDOM")

New ‘research’ user mode. This new feature implements automatic filtering of Argo data following international recommendations for research/climate studies. With this user mode, only Delayed Mode data with good QC flags are returned. Check out the User mode (🏄, 🏊, 🚣) section for all the details. (#265) by G. Maze

argopy now provides a specific xarray engine to properly read Argo netcdf files. Using `engine='argo'` in `xarray.open_dataset()`, all variables will be properly cast, i.e. returned with their expected data types, which is not the case otherwise. This works with ALL Argo netcdf file types (as listed in the Reference table R01). Some details here: `argopy.xarray.ArgoEngine`. (#208) by G. Maze

import xarray as xr

ds = xr.open_dataset("dac/aoml/1901393/1901393_prof.nc", engine='argo')

argopy can now provide authenticated access to the Argo CTD reference database for DMQC. Using the new user/password argopy options, it is possible to fetch the Argo CTD reference database with the `CTDRefDataFetcher` class. (#256) by G. Maze

import argopy

from argopy import CTDRefDataFetcher

with argopy.set_options(user="john_doe", password="***"):

    f = CTDRefDataFetcher(box=[15, 30, -70, -60, 0, 5000.0])

    ds = f.to_xarray()

Warning

argopy is ready, but the Argo CTD reference database for DMQC is not fully published on the Ifremer ERDDAP yet. This new feature will thus be fully operational soon. Until then, argopy should raise an ErddapHTTPNotFound error when using the new fetcher.

New option to control the expiration time of cache files: `cache_expiration`.

Internals

Update new argovis dashboard links for floats and profiles. (#271) by G. Maze

Index store can now export search results to standard Argo index file format. See all details in Store: Low-level Argo Index access. (#260) by G. Maze

from argopy import ArgoIndex as indexstore

# or:

# from argopy.stores import indexstore_pd as indexstore

# or:

# from argopy.stores import indexstore_pa as indexstore

idx = indexstore().search_wmo(3902131) # Perform any search

idx.to_indexfile('short_index.txt') # export search results as standard Argo index csv file

Index store can now load/search the Argo Bio and Synthetic profile index files. Simply give the name of the Bio or Synthetic Profile index file to retrieve the full index. This store also comes with a new search criterion for BGC: by parameters. See all details in Store: Low-level Argo Index access. (#261) by G. Maze

from argopy import ArgoIndex as indexstore

# or:

# from argopy.stores import indexstore_pd as indexstore

# or:

# from argopy.stores import indexstore_pa as indexstore

idx = indexstore(index_file="argo_bio-profile_index.txt").load()

idx.search_params(['C1PHASE_DOXY', 'DOWNWELLING_PAR'])

Use a mocked server for all http and GDAC ftp requests in CI tests (#249, #252, #255) by G. Maze

Removed support for minimal dependency requirements and for python 3.7. (#252) by G. Maze

Changed License from Apache to EUPL 1.2

Breaking changes

v0.1.13 (28 Mar. 2023)

Features and front-end API

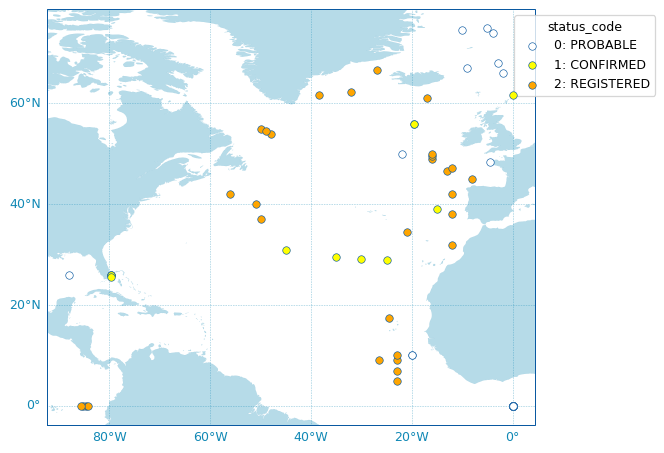

New utility class to retrieve the Argo deployment plan from the Ocean-OPS api. This is the utility class `OceanOPSDeployments`. See the new documentation section on Deployment Plan for more. (#244) by G. Maze

from argopy import OceanOPSDeployments

deployment = OceanOPSDeployments()

deployment = OceanOPSDeployments([-90,0,0,90])

deployment = OceanOPSDeployments([-90,0,0,90], deployed_only=True) # Keep only floats already deployed, i.e. drop planned deployments

df = deployment.to_dataframe()

deployment.status_code

fig, ax = deployment.plot_status()

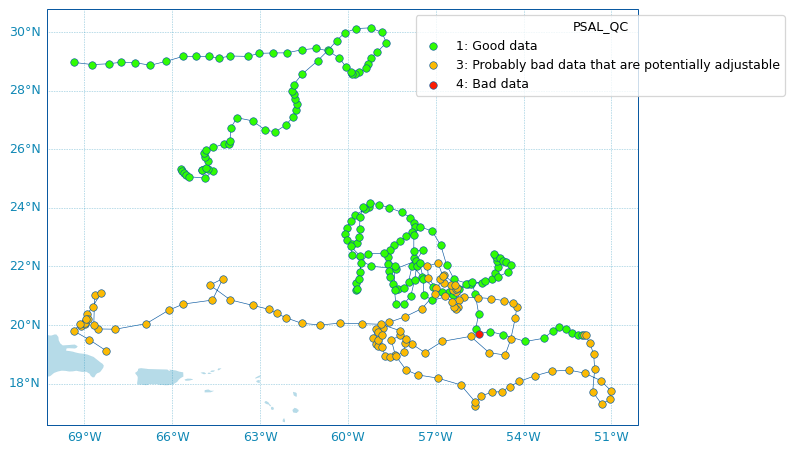

New scatter map utility for easy plotting of Argo-related variables. The new `argopy.plot.scatter_map()` utility function is dedicated to making maps with Argo profile positions coloured according to specific variables: a scatter map. Profile colouring is finely tuned for some variables: QC flags, Data Mode and Deployment Status. By default, float trajectories are always shown, but this can be changed. See the new documentation section on Scatter Maps for more. (#245) by G. Maze

from argopy.plot import scatter_map

fig, ax = scatter_map(ds_or_df,
                      x='LONGITUDE', y='LATITUDE', hue='PSAL_QC',
                      traj_axis='PLATFORM_NUMBER')

New Argo colors utility to manage segmented colormaps and pre-defined Argo color sets. The new `argopy.plot.ArgoColors` utility class aims to easily provide colors for plots of Argo-related variables. See the new documentation section on Argo colors for more. (#245) by G. Maze

from argopy.plot import ArgoColors

ArgoColors().list_valid_known_colormaps

ArgoColors().known_colormaps.keys()

ArgoColors('data_mode')

ArgoColors('data_mode').cmap

ArgoColors('data_mode').definition

ArgoColors('Set2').cmap

ArgoColors('Spectral', N=25).cmap

Internals

Because of the new `argopy.plot.ArgoColors`, the argopy.plot.discrete_coloring utility is deprecated in 0.1.13. Calling it will raise an error after argopy 0.1.14. (#245) by G. Maze

New method to check status of web API: now allows for a keyword check rather than a simple url ping. This comes with 2 new utility functions, `utilities.urlhaskeyword()` and `utilities.isalive()`. (#247) by G. Maze.

Removed dependency on Scikit-learn LabelEncoder. (#239) by G. Maze
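Why a keyword check is more informative than a ping can be shown with a sketch that needs no network access. A server may answer with a success status while serving a maintenance page, so the response body is also searched for an expected keyword. The `url_has_keyword` and `fake_fetch` functions and the example.org URLs are hypothetical and are not the argopy utilities named above.

```python
def url_has_keyword(fetch, url, keyword):
    """Check both the HTTP status and the presence of `keyword` in the body.

    `fetch` is any callable returning a (status_code, body_text) tuple.
    """
    status, body = fetch(url)
    return status == 200 and keyword in body


def fake_fetch(url):
    # Simulated server: it answers 200 even while showing a maintenance page.
    pages = {
        "https://example.org/ok": (200, "dataset: ArgoFloats"),
        "https://example.org/maintenance": (200, "Service under maintenance"),
    }
    return pages.get(url, (404, ""))


print(url_has_keyword(fake_fetch, "https://example.org/ok", "ArgoFloats"))
print(url_has_keyword(fake_fetch, "https://example.org/maintenance", "ArgoFloats"))
```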

Breaking changes

Data source `localftp` is deprecated and removed from argopy. It’s been replaced by the `gdac` data source with the appropriate `ftp` option. See Data sources. (#240) by G. Maze

Breaking changes with previous versions

`argopy.utilities.ArgoNVSReferenceTables` methods `all_tbl` and `all_tbl_name` are now properties, not methods.
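In practice, a method becoming a property means that the call parentheses must be dropped. The sketch below reproduces this kind of change on two hypothetical toy classes. The class names and the returned dictionary are made up and are not the actual reference table content.

```python
class TablesBefore:
    def all_tbl_name(self):          # old style: a method
        return {"R01": "example table name"}


class TablesAfter:
    @property
    def all_tbl_name(self):          # new style: a property
        return {"R01": "example table name"}


old = TablesBefore().all_tbl_name()  # method call, with parentheses
new = TablesAfter().all_tbl_name     # attribute access, no parentheses

try:
    TablesAfter().all_tbl_name()     # old calling style on the new class
except TypeError as err:
    print("calling the property fails:", err)
```

Code written for the previous versions therefore fails with a `TypeError` until the parentheses are removed.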

v0.1.12 (16 May 2022)

Internals

Update `erddap` server from https://www.ifremer.fr/erddap to https://erddap.ifremer.fr/erddap. (@af5692f) by G. Maze

v0.1.11 (13 Apr. 2022)

Features and front-end API

New data source `gdac` to retrieve data from a GDAC compliant source, for DataFetcher and IndexFetcher. You can specify the FTP source with the `ftp` fetcher option or with the argopy global option `ftp`. The FTP source supports the http, ftp or local file protocols. This fetcher is optimised if pyarrow is available, otherwise pandas dataframes are used. See update on Data sources. (#157) by G. Maze

import argopy

from argopy import IndexFetcher

from argopy import DataFetcher

argo = IndexFetcher(src='gdac')

argo = DataFetcher(src='gdac')

argo = DataFetcher(src='gdac', ftp="https://data-argo.ifremer.fr") # Default and fastest !

argo = DataFetcher(src='gdac', ftp="ftp://ftp.ifremer.fr/ifremer/argo")

with argopy.set_options(src='gdac', ftp='ftp://usgodae.org/pub/outgoing/argo'):

    argo = DataFetcher()

Note

The new gdac fetcher uses the Argo index to determine which profile files to load. Hence, this fetcher may perform poorly when used with a region access point. Check Performances for ways to improve this; otherwise, we recommend using a web API access point (erddap or argovis).

Warning

Since the new gdac fetcher can use a local copy of the GDAC ftp server, the legacy localftp fetcher is now deprecated.

Using it will raise an error up to v0.1.12. It will then be removed in v0.1.13.

New dashboard for profiles and new 3rd party dashboards. Calling the data fetcher dashboard method will return the Euro-Argo profile page for a single profile. Very useful to look at the data before loading it. This comes with 2 new utility functions to get the Coriolis ID of profiles (`utilities.get_coriolis_profile_id()`) and to return the list of profile webpages (`utilities.get_ea_profile_page()`). (#198) by G. Maze.

from argopy import DataFetcher as ArgoDataFetcher

ArgoDataFetcher().profile(5904797, 11).dashboard()

from argopy.utilities import get_coriolis_profile_id, get_ea_profile_page

get_coriolis_profile_id([6902755, 6902756], [11, 12])

get_ea_profile_page([6902755, 6902756], [11, 12])

The new profile dashboard can also be accessed with:

import argopy

argopy.dashboard(5904797, 11)

We added the Ocean-OPS (formerly JCOMMOPS) dashboard for all floats and the Argo-BGC dashboard for BGC floats:

import argopy

argopy.dashboard(5904797, type='ocean-ops')

# or

argopy.dashboard(5904797, 12, type='bgc')

New utility `argopy.utilities.ArgoNVSReferenceTables` to retrieve Argo Reference Tables. (@cc8fdbe) by G. Maze.

from argopy.utilities import ArgoNVSReferenceTables

R = ArgoNVSReferenceTables()

R.all_tbl_name()

R.tbl(3)

R.tbl('R09')

Internals

from argopy import DataFetcher

argo = DataFetcher(src='gdac').float(6903076)

argo.index

New index store design. A new index store is used by data and index `gdac` fetchers to handle access and search in Argo index csv files. It uses a pyarrow table if available or a pandas dataframe otherwise. More details at Argo index store. Directly using this index store is not recommended but provides better performance for expert users interested in Argo sampling analysis.

from argopy.stores.argo_index_pa import indexstore_pyarrow as indexstore

idx = indexstore(host="https://data-argo.ifremer.fr", index_file="ar_index_global_prof.txt") # Default

idx.load()

idx.search_lat_lon_tim([-60, -55, 40., 45., '2007-08-01', '2007-09-01'])

idx.N_MATCH # Return number of search results

idx.to_dataframe() # Convert search results to a dataframe

Refactoring of CI tests to use more fixtures and pytest parametrize. (#157) by G. Maze

Fix bug in the erddap data fetcher that caused profile requests to ignore cycle numbers. (@301e557) by G. Maze.

Breaking changes

Index fetcher for local FTP no longer supports the option `index_file`. The name of the index file is internally determined using the dataset requested: `ar_index_global_prof.txt` for `ds='phy'` and `argo_synthetic-profile_index.txt` for `ds='bgc'`. Using this option will raise a deprecation warning up to v0.1.12 and will then raise an error. (#157) by G. Maze

Complete refactoring of the `argopy.plotters` module into `argopy.plot`. (#198) by G. Maze.

Remove deprecation warnings for: ‘plotters.plot_dac’, ‘plotters.plot_profilerType’. These now raise an error.

v0.1.10 (4 Mar. 2022)

Internals

Update and clean up requirements. Remove upper bound on all dependencies (#182) by R. Abernathey.

v0.1.9 (19 Jan. 2022)

Features and front-end API

New method to preprocess data for OWC software. This method can preprocess Argo data and optionally create float_source/<WMO>.mat files to be used as inputs for OWC implementations in Matlab and Python. See the Salinity calibration documentation page for more. (#142) by G. Maze.

from argopy import DataFetcher as ArgoDataFetcher

ds = ArgoDataFetcher(mode='expert').float(6902766).load().data

ds.argo.create_float_source("float_source")

ds.argo.create_float_source("float_source", force='raw')

ds_source = ds.argo.create_float_source()

This new method comes with other methods and improvements:

A new `Dataset.argo.filter_scalib_pres()` method to filter variables according to OWC salinity calibration software requirements,

A new `Dataset.argo.groupby_pressure_bins()` method to subsample a dataset down to one value per pressure bin (a perfect alternative to interpolation on standard depth levels to precisely avoid interpolation…), see Pressure levels: Group-by bins for more help,

An improved `Dataset.argo.filter_qc()` method to select which fields to consider (new option `QC_fields`),

Add conductivity (`CNDC`) to the possible output of the `TEOS10` method.
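Subsampling to one value per pressure bin, as opposed to interpolating onto standard levels, can be sketched in a few lines. The sketch keeps the deepest measurement of each bin, which is only one of several possible selection rules. The `groupby_pressure_bins` function below is a hypothetical stand-alone helper working on (pressure, value) pairs, not the accessor method.

```python
from bisect import bisect_right


def groupby_pressure_bins(levels, bins):
    """Keep one (pressure, value) pair per bin: the deepest one.

    `bins` holds the sorted lower edges of the bins. Levels shallower than
    the first edge are dropped and the last bin is open-ended.
    """
    selected = {}
    for pres, value in levels:
        idx = bisect_right(bins, pres) - 1
        if idx < 0:
            continue
        if idx not in selected or pres > selected[idx][0]:
            selected[idx] = (pres, value)
    return [selected[i] for i in sorted(selected)]


# Made-up (pressure in dbar, temperature) pairs.
levels = [(2.0, 20.1), (7.5, 19.8), (9.0, 19.7), (14.0, 18.9), (31.0, 15.2)]
print(groupby_pressure_bins(levels, bins=[0, 10, 20, 30]))
```

Every value returned is an actual measurement: the three levels of the 0 to 10 bin collapse to the one at 9.0, and the empty 20 to 30 bin simply yields nothing.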

New dataset properties accessible from the argo xarray accessor: `N_POINTS`, `N_LEVELS`, `N_PROF`. Note that depending on the format of the dataset (a collection of points or of profiles) these values do or do not take into account NaN. This information is also visible with a simple print of the accessor. (#142) by G. Maze.

from argopy import DataFetcher as ArgoDataFetcher

ds = ArgoDataFetcher(mode='expert').float(6902766).load().data

ds.argo.N_POINTS

ds.argo.N_LEVELS

ds.argo.N_PROF

ds.argo

New plotter function `argopy.plotters.open_sat_altim_report()` to insert the CLS Satellite Altimeter Report figure in a notebook cell. (#159) by G. Maze.

from argopy.plotters import open_sat_altim_report

open_sat_altim_report(6902766)

open_sat_altim_report([6902766, 6902772, 6902914])

open_sat_altim_report([6902766, 6902772, 6902914], embed='dropdown') # Default

open_sat_altim_report([6902766, 6902772, 6902914], embed='slide')

open_sat_altim_report([6902766, 6902772, 6902914], embed='list')

open_sat_altim_report([6902766, 6902772, 6902914], embed=None)

from argopy import DataFetcher

from argopy import IndexFetcher

DataFetcher().float([6902745, 6902746]).plot('qc_altimetry')

IndexFetcher().float([6902745, 6902746]).plot('qc_altimetry')

New utility method to retrieve topography. The `argopy.TopoFetcher` will load the GEBCO topography for a given region. (#150) by G. Maze.

from argopy import TopoFetcher

box = [-75, -45, 20, 30]

ds = TopoFetcher(box).to_xarray()

ds = TopoFetcher(box, ds='gebco', stride=[10, 10], cache=True).to_xarray()

For convenience, we also added a new property to the data fetcher that returns the domain covered by the dataset.

from argopy import DataFetcher as ArgoDataFetcher

loader = ArgoDataFetcher().float(2901623)

loader.domain # Returns [89.093, 96.036, -0.278, 4.16, 15.0, 2026.0, numpy.datetime64('2010-05-14T03:35:00.000000000'), numpy.datetime64('2013-01-01T01:45:00.000000000')]

Update the documentation with a new section about Data quality control.

Internals

Uses a new API endpoint for the `argovis` data source when fetching a `region`. More on this issue here. (#158) by G. Maze.

Update documentation theme, and pages now use the xarray accessor sphinx extension. (#104) by G. Maze.

Update Binder links to work without the deprecated Pangeo-Binder service. (#164) by G. Maze.

v0.1.8 (2 Nov. 2021)

Features and front-end API

Improve plotting functions. All functions are now available for both the index and data fetchers. See the Data visualisation page for more details. Reduced plotting dependencies to Matplotlib only. Argopy will use Seaborn and/or Cartopy if available. (#56) by G. Maze.

from argopy import IndexFetcher as ArgoIndexFetcher

from argopy import DataFetcher as ArgoDataFetcher

obj = ArgoIndexFetcher().float([6902766, 6902772, 6902914, 6902746])

# OR

obj = ArgoDataFetcher().float([6902766, 6902772, 6902914, 6902746])

fig, ax = obj.plot()

fig, ax = obj.plot('trajectory')

fig, ax = obj.plot('trajectory', style='white', palette='Set1', figsize=(10,6))

fig, ax = obj.plot('dac')

fig, ax = obj.plot('institution')

fig, ax = obj.plot('profiler')

New methods and properties for data and index fetchers. (#56) by G. Maze. The `argopy.DataFetcher.load()` and `argopy.IndexFetcher.load()` methods internally call on the to_xarray() methods and store results in the fetcher instance. The `argopy.DataFetcher.to_xarray()` will trigger a fetch on every call, while the `argopy.DataFetcher.load()` will not.

from argopy import DataFetcher as ArgoDataFetcher

loader = ArgoDataFetcher().float([6902766, 6902772, 6902914, 6902746])

loader.load()

loader.data

loader.index

loader.to_index()

from argopy import IndexFetcher as ArgoIndexFetcher

indexer = ArgoIndexFetcher().float([6902766, 6902772])

indexer.load()

indexer.index
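The difference between the two methods amounts to caching the result on the instance. The toy class below sketches that pattern: `ToyFetcher`, its fetch counter and the `force` argument are hypothetical illustrations of the idea and do not reproduce the fetcher internals.

```python
class ToyFetcher:
    """Minimal stand-in for a fetcher with a cached `load()`."""

    def __init__(self):
        self.n_fetch = 0     # counts how many times data were fetched
        self._data = None

    def to_xarray(self):
        # Always triggers a new (here simulated) fetch.
        self.n_fetch += 1
        return {"TEMP": [12.0, 11.5]}

    def load(self, force=False):
        # Fetch only if nothing is stored yet, or if explicitly forced.
        if self._data is None or force:
            self._data = self.to_xarray()
        return self

    @property
    def data(self):
        return self.load()._data


f = ToyFetcher()
f.to_xarray()
f.to_xarray()
count_after_to_xarray = f.n_fetch   # two calls, two fetches

f.load()
f.load()
_ = f.data
count_after_load = f.n_fetch        # only one additional fetch
print(count_after_to_xarray, count_after_load)
```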

Add optional speed of sound computation to xarray accessor teos10 method. (#90) by G. Maze.

Code spell fixes (#89) by K. Schwehr.

Internals

Check validity of access points options (WMO and box) in the facade, no checks at the fetcher level. (#92) by G. Maze.

More general options. Fix #91. (#102) by G. Maze.

`trust_env` to allow for local environment variables to be used by fsspec to connect to the internet. Useful for those using a proxy.

Documentation on Read The Docs now uses a pip environment and gets rid of the memory-hungry conda. (#103) by G. Maze.

`xarray.Dataset` argopy accessor `argo` has clean documentation.

Breaking changes with previous versions

Drop support for python 3.6 and older. Lock range of dependencies version support.

In the plotters module, the `plot_dac` and `plot_profilerType` functions have been replaced by `bar_plot`. (#56) by G. Maze.

Internals

Internal logging available and upgrade dependencies version support (#56) by G. Maze. To see internal logs, you can set-up your application like this:

import logging

DEBUGFORMATTER = '%(asctime)s [%(levelname)s] [%(name)s] %(filename)s:%(lineno)d: %(message)s'

logging.basicConfig(
    level=logging.DEBUG,
    format=DEBUGFORMATTER,
    datefmt='%m/%d/%Y %I:%M:%S %p',
    handlers=[logging.FileHandler("argopy.log", mode='w')]
)

v0.1.7 (4 Jan. 2021)

Long overdue release!

Features and front-end API

Live monitor for the status (availability) of data sources. See documentation page on Status of sources. (#36) by G. Maze.

import argopy

argopy.status()

# or

argopy.status(refresh=15)

Optimise large data fetching with parallelization, for all data fetchers (erddap, localftp and argovis). See documentation page on Parallel data fetching. Two parallel methods are available: multi-threading or multi-processing. (#28) by G. Maze.

from argopy import DataFetcher as ArgoDataFetcher

loader = ArgoDataFetcher(parallel=True)

loader.float([6902766, 6902772, 6902914, 6902746]).to_xarray()

loader.region([-85,-45,10.,20.,0,1000.,'2012-01','2012-02']).to_xarray()

Breaking changes with previous versions

In the teos10 xarray accessor, the `standard_name` attribute will now be populated using values from the CF Standard Name table if one exists. The previous values of `standard_name` have been moved to the `long_name` attribute. (#74) by A. Barna.

The unique resource identifier property is now named `uri` for all data fetchers; it is always a list of strings.

Internals

New open_mfdataset and open_mfjson methods in Argo stores. These can be used to open, pre-process and concatenate a collection of paths, either sequentially or in parallel. (#28) by G. Maze.

Unit testing is now done on a controlled conda environment. This makes it easier to tell errors coming from development apart from errors due to dependency updates. (#65) by G. Maze.
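The open, pre-process and concatenate pattern can be sketched with the standard library alone. This is an illustration of the idea, not the implementation of open_mfdataset: the open_many function, its arguments and the toy opener are hypothetical, and plain lists stand in for datasets.

```python
from concurrent.futures import ThreadPoolExecutor


def open_many(paths, opener, preprocess=None, parallel=False, max_workers=4):
    """Open each path, optionally pre-process it, and concatenate the results.

    Hypothetical helper: `opener` maps a path to a list of records and
    `preprocess` maps such a list to another list.
    """
    def load(path):
        data = opener(path)
        return preprocess(data) if preprocess is not None else data

    if parallel:
        # Multi-threading: Executor.map returns results in the input order
        with ThreadPoolExecutor(max_workers=max_workers) as pool:
            chunks = list(pool.map(load, paths))
    else:
        chunks = [load(p) for p in paths]

    # Concatenate the per-path results into one collection
    return [record for chunk in chunks for record in chunk]


# Toy opener: pretend each "file" holds two measurements named after the path
def fake_open(path):
    return [f"{path}:1", f"{path}:2"]


paths = ["a.nc", "b.nc", "c.nc"]
sequential = open_many(paths, fake_open, preprocess=lambda d: d[:1])
threaded = open_many(paths, fake_open, preprocess=lambda d: d[:1], parallel=True)
```

Both calls return the same collection; the parallel flag only changes how the per-path work is scheduled.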

v0.1.6 (31 Aug. 2020)#

JOSS paper published. You can now cite argopy with a clean reference. (#30) by G. Maze and K. Balem.

Maze G. and Balem K. (2020). argopy: A Python library for Argo ocean data analysis. Journal of Open Source Software, 5(52), 2425 doi: 10.21105/joss.02425.

v0.1.5 (10 July 2020)#

Features and front-end API

A new data source with the argovis data fetcher, all access points available (#24). By T. Tucker and G. Maze.

from argopy import DataFetcher as ArgoDataFetcher

loader = ArgoDataFetcher(src='argovis')

loader.float(6902746).to_xarray()

loader.profile(6902746, 12).to_xarray()

loader.region([-85,-45,10.,20.,0,1000.,'2012-01','2012-02']).to_xarray()

Easily compute TEOS-10 variables with new argo accessor function teos10. This needs gsw to be installed. (#37) By G. Maze.

from argopy import DataFetcher as ArgoDataFetcher

ds = ArgoDataFetcher().region([-85,-45,10.,20.,0,1000.,'2012-01','2012-02']).to_xarray()

ds = ds.argo.teos10()

ds = ds.argo.teos10(['PV'])

ds_teos10 = ds.argo.teos10(['SA', 'CT'], inplace=False)

argopy can now be installed with conda (#29, #31, #32). By F. Fernandes.

conda install -c conda-forge argopy

Breaking changes with previous versions

The local_ftp option of the localftp data source must now point to the folder where the dac directory is found. This breaks compatibility with rsynced local FTP copies because rsync does not give a dac folder (e.g. #33). An instructive error message is raised to notify users if any of the DAC names is found at the n-1 path level. (#34).
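One reading of this path check can be sketched as follows. This is not argopy's code: the check_local_ftp function, the LocalFTPPathError exception and the wording of the messages are hypothetical, and the list of DAC folder names is an assumption based on the usual GDAC layout.

```python
import tempfile
from pathlib import Path

# Assumption: DAC folder names as usually found under the GDAC "dac" directory
DAC_NAMES = ["aoml", "bodc", "coriolis", "csio", "csiro", "incois",
             "jma", "kma", "kordi", "meds", "nmdis"]


class LocalFTPPathError(ValueError):
    """Hypothetical error raised when the local FTP path looks wrong."""


def check_local_ftp(path):
    """Return the path if it contains a 'dac' folder, raise an instructive error otherwise."""
    path = Path(path)
    if (path / "dac").is_dir():
        return path
    # DAC folders sitting directly in `path` mean the user pointed one level too deep
    found = [name for name in DAC_NAMES if (path / name).is_dir()]
    if found:
        raise LocalFTPPathError(
            f"Found DAC folder(s) {found} directly in '{path}'. "
            "The path must point to the folder that contains 'dac'."
        )
    raise LocalFTPPathError(f"No 'dac' folder found in '{path}'.")


# Build a tiny fake FTP copy to exercise both outcomes
root = Path(tempfile.mkdtemp())
(root / "dac" / "coriolis").mkdir(parents=True)
ok = check_local_ftp(root)
try:
    check_local_ftp(root / "dac")
    too_deep_message = None
except LocalFTPPathError as err:
    too_deep_message = str(err)
```

Pointing at the folder that contains dac passes, while pointing at dac itself triggers the explanatory error.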

Internals

Implement a webAPI availability check in unit testing. This allows for more robust erddap and argovis tests that are not based on internet connectivity only. (@5a46a39).
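A check of this kind can be sketched with the standard library. The is_api_available function, the placeholder address and the test class below are hypothetical and are not taken from the argopy test suite; they only show how a test can be skipped when a web API does not answer.

```python
import http.client
import unittest
import urllib.error
import urllib.request


def is_api_available(url, timeout=5):
    """Return True if `url` answers with HTTP status 200, False on any failure."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status == 200
    except (urllib.error.URLError, http.client.HTTPException, ValueError, OSError):
        # Covers refused connections, timeouts, HTTP errors and malformed URLs
        return False


# Placeholder local address, expected to refuse connections;
# replace it with the web API the tests depend on
API_URL = "http://127.0.0.1:1/"


@unittest.skipUnless(is_api_available(API_URL, timeout=2), "web API not available")
class TestRemoteFetcher(unittest.TestCase):
    def test_fetch(self):
        self.assertTrue(True)  # a real test would query the API here


malformed_result = is_api_available("not-a-url")
```

Compared with a plain connectivity test, this skips the tests when the machine is online but the specific service is down.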

v0.1.4 (24 June 2020)#

Features and front-end API

import numpy as np

from argopy import DataFetcher as ArgoDataFetcher

ds = ArgoDataFetcher().region([-85,-45,10.,20.,0,1000.,'2012-01','2012-12']).to_xarray()

ds = ds.argo.point2profile()

ds_interp = ds.argo.interp_std_levels(np.arange(0,900,50))

Insert in a Jupyter notebook cell the Euro-Argo fleet monitoring dashboard page, possibly for a specific float (#20). By G. Maze.

import argopy

argopy.dashboard()

# or

argopy.dashboard(wmo=6902746)

The localftp index and data fetchers now have the region and profile access points available (#25). By G. Maze.

Breaking changes with previous versions

[None]

Internals

Now uses fsspec as file system for caching as well as accessing local and remote files (#19). This closes issues #12, #15 and #17. argopy fetchers must now use (or implement if necessary) one of the internal file systems available in the new module argopy.stores. By G. Maze.

Erddap fetcher now uses netcdf format to retrieve data (#19).

v0.1.3 (15 May 2020)#

Features and front-end API

from argopy import IndexFetcher as ArgoIndexFetcher

idx = ArgoIndexFetcher().float(6902746)

idx.to_dataframe()

idx.plot('trajectory')

The index fetcher can manage caching and works with both Erddap and localftp data sources. It is basically the same as the data fetcher, but it does not load measurements, only meta-data. This can be very useful when looking for regional sampling or trajectories.

Tip

Performance: we recommend using the localftp data source when working with this index fetcher because the erddap data source currently suffers from poor performance. This is linked to #16 and is being addressed by Ifremer.

The index fetcher comes with basic plotting functionalities with the argopy.IndexFetcher.plot() method to rapidly visualise measurement distributions by DAC, latitude/longitude and float type.

Warning

The design of plotting and visualisation features in argopy is constantly evolving, so this may change in future releases.

The argopy.DataFetcher now has an argopy.DataFetcher.to_dataframe() method to return a pandas.DataFrame.

New utility functions: argopy.utilities.open_etopo1(), argopy.show_versions().

Breaking changes with previous versions

The backend option in data fetchers and the global option datasrc have been renamed to src. This makes the code more coherent (@ec6b32e).

Code management

v0.1.2 (15 May 2020)#

We didn’t like this one this morning, so we moved on to the next one!

v0.1.1 (3 Apr. 2020)#

Features and front-end API

Added new data fetcher backend localftp in DataFetcher (@c5f7cb6):

from argopy import DataFetcher as ArgoDataFetcher

argo_loader = ArgoDataFetcher(backend='localftp', path_ftp='/data/Argo/ftp_copy')

argo_loader.float(6902746).to_xarray()

Introduced global OPTIONS to set values for: cache folder, dataset (e.g. phy or bgc), local ftp path, data fetcher (erddap or localftp) and user level (standard or expert). Can be used as a context manager in a with statement (@83ccfb5):

with argopy.set_options(mode='expert', datasrc='erddap'):

    ds = argopy.DataFetcher().float(3901530).to_xarray()
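For readers curious about how a global option can be changed temporarily inside a with block, here is a minimal sketch of the pattern. It is not argopy's source code: the OPTIONS dictionary content, the default values and the set_options class below are simplified, hypothetical stand-ins.

```python
# Hypothetical global options and defaults, for illustration only
OPTIONS = {"mode": "standard", "datasrc": "erddap"}


class set_options:
    """Set options globally, or temporarily when used in a with statement."""

    def __init__(self, **kwargs):
        unknown = set(kwargs) - set(OPTIONS)
        if unknown:
            raise ValueError(f"Unknown option(s): {sorted(unknown)}")
        # Remember previous values so they can be restored on exit
        self.old = {key: OPTIONS[key] for key in kwargs}
        OPTIONS.update(kwargs)

    def __enter__(self):
        return self

    def __exit__(self, exc_type, exc_value, traceback):
        OPTIONS.update(self.old)


with set_options(mode="expert"):
    mode_inside = OPTIONS["mode"]   # "expert" while inside the block
mode_after = OPTIONS["mode"]        # previous value restored on exit
```

Calling set_options(...) without a with statement simply leaves the new values in place, which is why the same object can serve both uses.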

Added an argopy.tutorial module to load sample data for documentation and unit testing (@4af09b5):

ftproot, flist = argopy.tutorial.open_dataset('localftp')

txtfile = argopy.tutorial.open_dataset('weekly_index_prof')

Improved xarray argo accessor. Added methods to cast data types and to filter variables according to data mode or quality flags. Added methods to transform a collection of points into a collection of profiles, and vice versa (@14cda55):

ds = argopy.DataFetcher().float(3901530).to_xarray() # get a collection of points

dsprof = ds.argo.point2profile() # transform to profiles

ds = dsprof.argo.profile2point() # transform to points
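To make the two layouts concrete, here is a small sketch in plain Python. It does not use argopy or xarray: the record fields (wmo, cycle, pres, temp), the made-up values and the two helper functions are hypothetical and only illustrate grouping points into profiles and flattening them back.

```python
from collections import defaultdict


def points_to_profiles(points):
    """Group point records by (wmo, cycle) into profiles sorted by pressure."""
    profiles = defaultdict(list)
    for p in points:
        profiles[(p["wmo"], p["cycle"])].append(p)
    return {key: sorted(levels, key=lambda p: p["pres"]) for key, levels in profiles.items()}


def profiles_to_points(profiles):
    """Flatten profiles back into a single list of point records."""
    return [p for key in sorted(profiles) for p in profiles[key]]


# Made-up measurements: two levels in cycle 1, one level in cycle 2
points = [
    {"wmo": 3901530, "cycle": 1, "pres": 5.0, "temp": 20.1},
    {"wmo": 3901530, "cycle": 1, "pres": 10.0, "temp": 19.8},
    {"wmo": 3901530, "cycle": 2, "pres": 5.0, "temp": 20.3},
]
profiles = points_to_profiles(points)
round_trip = profiles_to_points(profiles)
```

The point layout is a flat list with one record per measurement, while the profile layout keys each vertical profile by float and cycle number.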

Changed License from MIT to Apache (@25f90c9)

Internal machinery

Add __all__ to control from argopy import * (@83ccfb5)

All data fetchers inherit from class ArgoDataFetcherProto in proto.py (@44f45a5)

Data fetchers use default options from global OPTIONS

In Erddap fetcher: methods to cast data type, to filter by data mode and by QC flags are now delegated to the xarray argo accessor methods.

Data fetchers methods to filter variables according to user mode are using variable lists defined in utilities.

argopy.utilities augmented with listing functions of: backends, standard variables and multiprofile files variables.

Introduce custom errors in errors.py (@2563c9f)

Front-end API ArgoDataFetcher uses a more general way of auto-discovering fetcher backends and their access points. Turned off the deployments access point, waiting for the index fetcher to do that.

Improved xarray argo accessor. More reliable point2profile and data type casting with cast_type

Code management

v0.1.0 (17 Mar. 2020)#

Initial release.

Erddap data fetcher